因果推断核心概念

我们将通过一个贯穿始终的简单例子来讲解:评估一个广告(比如一封营销邮件)对用户购买行为的影响。

- 干预(Treatment): 发送营销邮件。

- W = 1:用户被分配到处理组(计划发送邮件)。

- W = 0:用户被分配到对照组(计划不发送邮件)。

- 结果(Outcome): 是否购买。Y = 1(购买),Y = 0(未购买)。

- 核心问题:我们关心的不是“收到邮件的用户购买率有多高”,而是“邮件导致了多少额外的购买”?这就是因果效应。

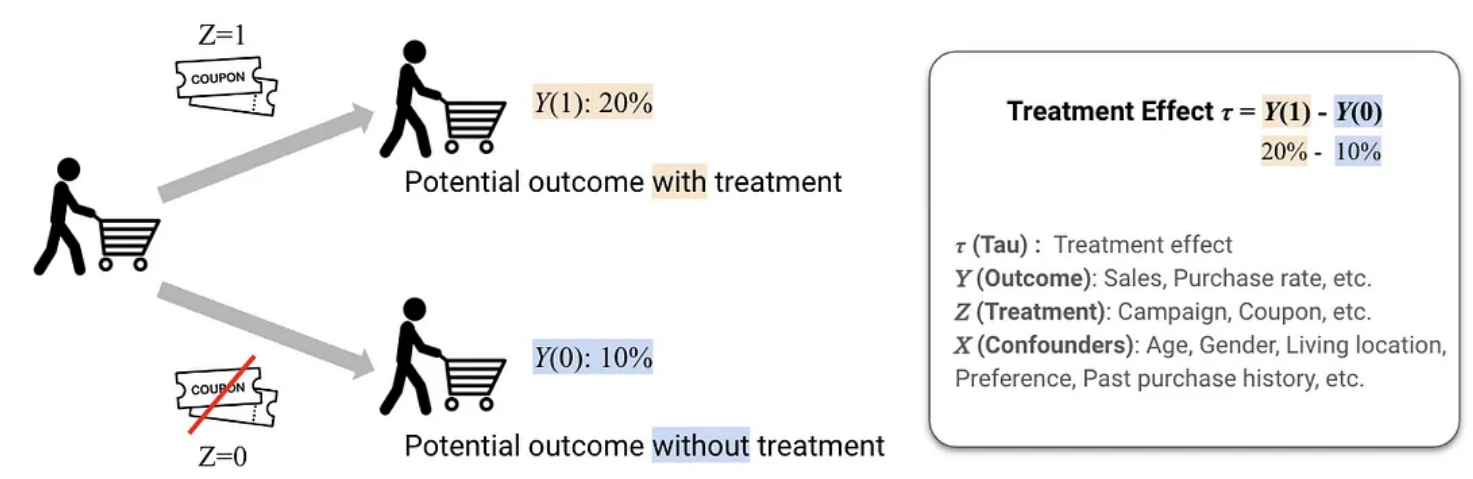

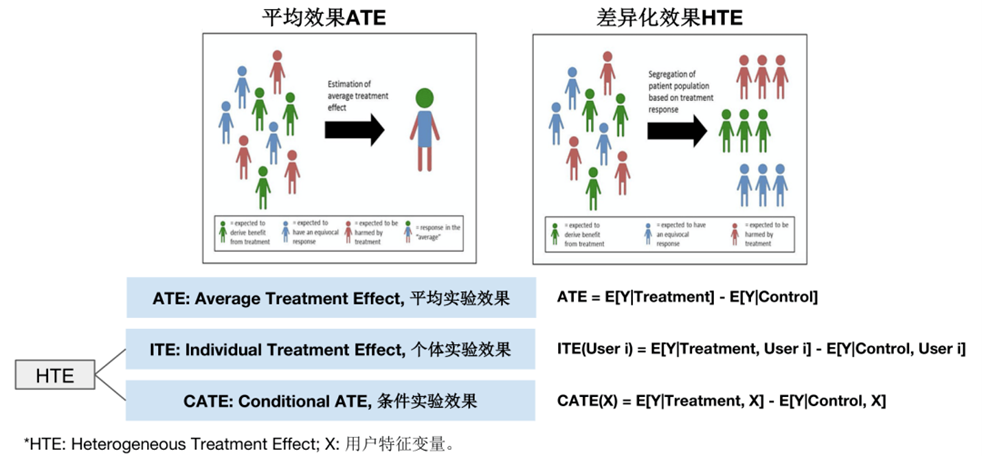

ATE – 平均处理效应 (Average Treatment Effect)

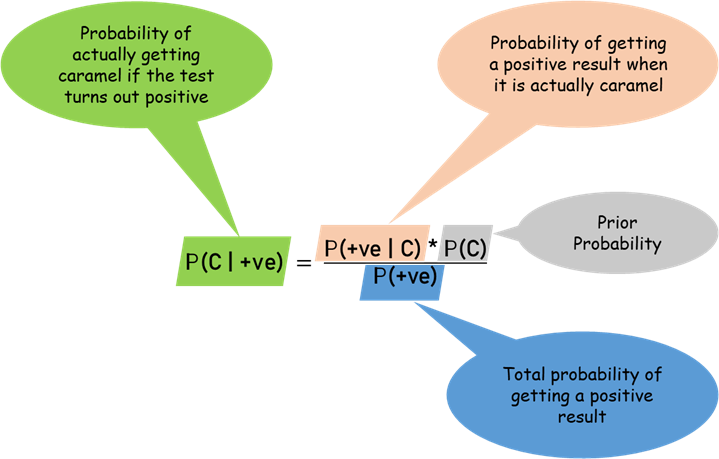

定义:干预(Treatment)对整个研究人群的平均影响。它回答了“如果我们对所有人施加干预,相比于对所有人都不施加干预,平均结果会差多少?”

公式:ATE = E[Y(1) – Y(0)] = E[Y(1)] – E[Y(0)]

- Y(1):如果接受处理后的潜在结果。

- Y(0):如果未接受处理后的潜在结果。

计算:在完美随机实验中(即分组是随机的),ATE可以简单地估算为:

ATE = (处理组购买率) – (对照组购买率)

例子:

- 处理组(收到邮件)的购买率 = 12%

- 对照组(未收邮件)的购买率 = 8%

- ATE = 12% – 8% = 4%

- 结论:这封营销邮件使得整个用户群体的购买率平均提升了4个百分点。

重要性:ATE是因果推断中最基础、最广泛关注的指标,它给出了干预的整体效果。

ATT – 处理组的平均处理效应 (Average Treatment Effect on the Treated)

定义:干预(Treatment)对实际接受了处理的人群的平均影响。它回答了“对那些实际被处理的人,处理比不处理平均多带来了多少效果?”

公式:ATT = E[Y(1) – Y(0) | W = 1]

与ATE的区别:

- ATE关心的是“对所有人的平均效果”。

- ATT关心的是“对被处理的人的平均效果”。

例子:继续使用邮件营销的例子。假设有些用户邮箱已失效,邮件被退回,他们实际上并未“暴露”在干预下。

- 处理组中实际收到邮件的用户购买率 = 15%

- 假设我们能知道,如果这些同样的人没收到邮件,他们的购买率会是 9%

- ATT = 15% – 9% = 6%

- 结论:这封邮件对那些实际收到它的人,平均产生了6个百分点的购买提升。

重要性:当我们更关心干预对“被触达”群体的效果时(例如计算已花费成本的回报率),ATT比ATE更具参考价值。在观察性研究中,ATT通常比ATE更容易估计。

CATE – 条件平均处理效应 (Conditional Average Treatment Effect)

定义:干预对具有特定特征(X)的子人群的平均影响。它是Uplift Modeling(增量建模)的核心目标。

公式:CATE = E[Y(1) – Y(0) | X = x]

- X是特征变量,比如用户年龄、历史消费等。

与ATE/ATT的区别:

- ATE/ATT给出一个单一的平均值。

- CATE允许效应因人而异,它输出的是一个关于特征 X的函数。

例子:我们想知道邮件对“年轻用户”和“老用户”的效果是否不同。

- 对特征 X(年龄 < 30)的子人群计算:CATE_年轻 = (年轻处理组购买率) – (年轻对照组购买率) = 15% – 5% = 10%

- 对特征 X(年龄 ≥ 30)的子人群计算:CATE_年长 = (年长处理组购买率) – (年长对照组购买率) = 6% – 7% = -1%

- 结论:营销邮件对年轻用户非常有效,平均提升10个百分点的购买率;但对年长用户略有负面效果。因此,最优策略是只给年轻用户发送邮件。

重要性:CATE是实现个性化策略(精准营销、个性化治疗等)的理论基础。CausalML中的模型(如Meta-Learners, Uplift Trees)主要就是为了预测CATE。

ITT – 意向性处理效应 (Intent-to-Treat Effect)

定义:基于最初的干预分配(而不是实际接受情况)来分析效果。它是ATE的一个特例,衡量的是“执行策略”本身的效果。

计算:ITT = E[Y | W=1] – E[Y | W=0](注意:这里只看初始分配 W)

例子:在邮件实验中,即使分配了邮件但有些没发送成功(邮箱错误),我们依然按最初分配的组别来分析:

- 所有被分配到处理组的用户(包括没收到邮件的)购买率 = 10%

- 所有被分配到对照组的用户购买率 = 8%

- ITT = 10% – 8% = 2%

- 结论:“执行发送邮件这个策略”平均为整个群体带来了2个百分点的购买提升。

重要性:ITT非常稳健,因为它严格遵守随机分配,避免了因“未依从”(如邮件发送失败)带来的混淆。它衡量的是策略的执行效果,而不是治疗本身的生物学或心理学效果。

TOT/LATE – 处理效应与局部平均处理效应 (Effect of Treatment on the Treated / Local Average Treatment Effect)

问题背景:当存在非依从性时(即分配了但未接受处理,如 W=1但未曝光),直接比较 Y会产生偏差。我们需要估计实际接受处理的影响。

工具变量法:为了解决非依从性问题,我们将初始分配 Z(即Treatment) 作为一个工具变量(IV),来估计实际处理 D(即Exposure) 对结果 Y的影响。

TOT/LATE定义:工具变量法估计出的效应,代表的是对依从者(Compliers)的平均效应。依从者是指那些行为完全由初始分配决定的人(即 Z=1则 D=1, Z=0则 D=0)。

计算(Wald估计量):LATE = (E[Y | Z=1] – E[Y | Z=0]) / (E[D | Z=1] – E[D | Z=0])

例子(对应Criteo数据集):

- Z(工具变量): treatment(计划分配)

- D(实际处理): exposure(实际曝光)

- Y(结果): conversion(转化)

- 计算:

- 分子:(分配至处理组的用户的转化率) – (分配至对照组的用户的转化率) = ITT

- 分母:(分配至处理组的用户的广告曝光率) – (分配至对照组的用户的广告曝光率)

- 假设:

- 处理组转化率 (Y|Z=1) = 12%,对照组转化率 (Y|Z=0) = 8% -> 分子 ITT = 4%

- 处理组曝光率 (D|Z=1) = 80%(有20%的人没加载出广告),对照组曝光率 (D|Z=0) = 5%(有5%的人通过其他途径看到了广告) -> 分母 = 80% – 5% = 75%

- LATE = 4% / 75% ≈ 5.33%

- 结论:广告曝光对那些依从者(即:被分配看广告且成功看到的人 vs 被分配不看广告就真的没看到的人)的真实因果效应是提升33个百分点的转化率。

重要性:LATE提供了一个在存在非依从性的情况下,估计实际处理效应的有效方法。它比ITT更接近“治疗”的纯效果,但需要注意的是,它只适用于“依从者”这个子群体。

总结与对比

因果推断核心概念总结与对比表

| 概念 | 英文全称 | 核心问题 | 公式/估算 | 特点与应用场景 |

| ATE | Average Treatment Effect | 干预对整个目标群体的平均效果? | ATE = E[Y(1) – Y(0)] | 最基础的指标,用于评估干预的整体价值。 |

| ATT | Average Treatment Effect on the Treated | 干预对实际接受了处理的人群的平均效果? | ATT = E[Y(1) – Y(0)|W=1] | 关注干预对实际触达群体的效果,常用于计算投资回报率(ROI)。 |

| CATE | Conditional Average Treatment Effect | 干预对具有特定特征的子人群的平均效果? | CATE = E[Y(1) – Y(0)|X=x] | 个性化策略的核心,Uplift Modeling的目标,用于识别异质性效应。 |

| ITT | Intent-to-Treat Effect | 执行分配策略本身(而非实际处理)的平均效果? | ITT = E[Y|Z=1] – E[Y|Z=0] | 衡量策略执行的效果,非常稳健,严格遵守随机分配原则。 |

| LATE | Local Average Treatment Effect | 干预对依从者(其行为完全由初始分配决定的人)的平均效果? | LATE=\frac{E[Y|Z=1]-E[Y|Z=0]}{E[D|Z=1]-E[D|Z=0]} | 使用工具变量法(IV) 估计,用于解决非依从性问题,估算实际处理的“纯净”效应。 |

注:符号说明

- Y(1), Y(0): 潜在结果(Potential Outcomes)

- W: 处理指示变量(Treatment Indicator)

- Z: 工具变量(Instrumental Variable),通常是初始分配

- D: 实际接受的处理(Actual Treatment)

- X: 协变量/特征(Covariates/Features)

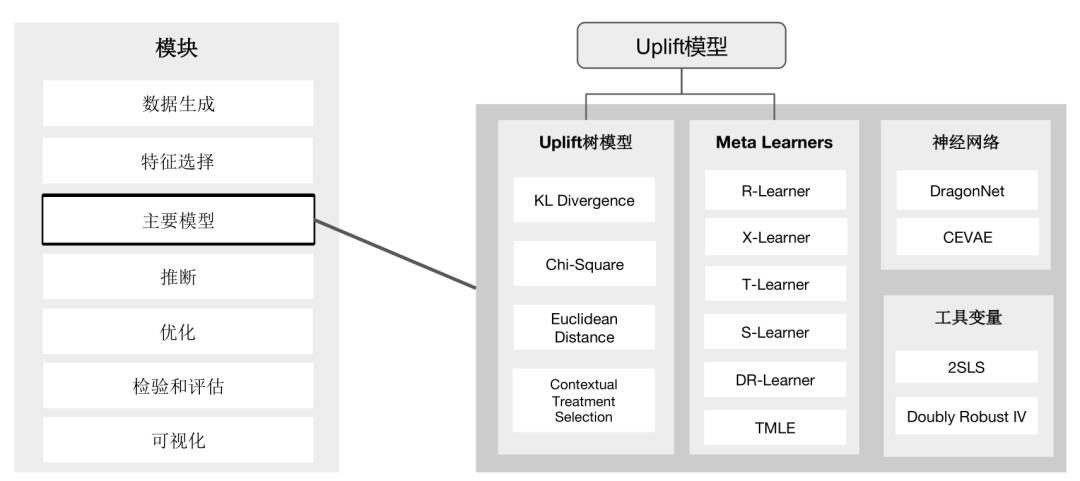

CausalML简介

CausalML 是一个基于 Python 的开源工具包,它巧妙地将机器学习的预测能力与因果推断的逻辑框架结合在一起,旨在从数据中识别出真正的因果关系,而不仅仅是相关关系。

项目概览

CausalML 的核心任务是估计干预措施(例如发放优惠券、投放广告)的因果效应,特别关注于条件平均处理效应(CATE) 或个体处理效应(ITE)。这意味着它不仅能回答“这个广告活动平均能提升多少销售额?”,更能回答“向哪位特定用户投放这个广告,效果最好?”,从而实现真正的个性化决策。

该项目最初由 Uber 的数据科学团队开发,用以解决其实际的业务问题,之后开源并持续维护,目前已成为因果机器学习领域一个非常受欢迎的工具。

核心功能与方法

CausalML 作为一个功能丰富的因果推断工具包,提供了多种主流算法来估计干预措施的因果效应。为了帮助你快速了解其算法体系,下面这个表格汇总了核心的算法类别及其代表方法。

| 算法类别 | 代表方法 | 主要特点 / 适用场景 |

| Meta-Learners(元学习器) | S-Learner, T-Learner, X-Learner, R-Learner, DR-Learner | 框架灵活,可以复用现有的机器学习模型(如线性模型、XGBoost等)来估计条件平均处理效应(CATE) |

| 基于树的算法 | Uplift Random Forests (基于KL散度、欧氏距离、卡方), Contextual Treatment Selection (CTS) Trees, Causal Forest | 专门为因果推断设计,通过特定的分裂准则(如提升差异)直接识别对干预敏感的群体 |

| 工具变量算法 | 两阶段最小二乘法 (2SLS), 双稳健工具变量学习器 (DRIV) | 适用于存在未观测混杂因素的情况,需要有一个有效的工具变量(Instrumental Variable) |

| 基于神经网络的方法 | CEVAE (Causal Effect Variational Autoencoder), DragonNet | 利用神经网络的强大表示能力处理复杂的非线性关系和高维数据 |

| 处理效果优化算法 | 反事实单元选择, 反事实价值估计器 | 超越效应估计,直接用于决策优化,例如在营销中最大化投资回报率(ROI) |

基于树的算法 (Tree-based algorithms)

这类算法专门为估计异质性处理效应(HTE)或提升(Uplift)而设计,通过改进决策树的分裂准则来直接优化因果效应的识别。

基于分布差异的森林:

- KL 散度提升随机森林:使用KL散度来衡量干预组和对照组在节点中的分布差异,并以此作为分裂准则。

- 欧氏距离提升随机森林:使用欧氏距离作为分布差异的度量。

- 卡方散度提升随机森林:使用卡方散度作为分布差异的度量。

基于特定准则的森林:

- 上下文处理选择提升随机森林:专注于识别在不同上下文(特征)下最优的处理方案。

- ΔΔP 准则提升随机森林:适用于二分树和二分类问题,直接优化处理组和对照组之间响应率之差。

- IDDP 准则提升随机森林:同样适用于二分树和二分类问题。

经典因果树:

- 交互树:适用于二分树和二分类问题,用于识别处理效应异质性。

- 因果推断树:适用于二分树和二分类问题,是因果推断中的基础树模型。

元学习器算法 (Meta-learner algorithms)

这类方法将因果估计问题转化为标准的有监督学习问题,可以灵活地套用任何机器学习模型(如XGBoost、随机森林、线性模型等)作为其基学习器。

- S-Learner:将处理指示变量作为一个特征,训练单个模型。

- T-Learner:为处理组和对照组分别训练两个独立的模型。

- X-Learner:一种更复杂的元学习器,结合了T-Learner的思想并进行交叉估计,通常在处理效应存在异质性时表现更好。

- R-Learner:基于稳健损失函数和交叉拟合的框架,旨在减少偏差和过拟合。

- 双稳健学习器:结合了处理倾向评分和结果回归模型,只要两者中有一个估计正确,就能得到无偏的效应估计,增强了模型的稳健性。

工具变量算法 (Instrumental variables algorithms)

这类方法用于处理存在未观测混杂因子的情况,但需要一个有效的工具变量。

- 两阶段最小二乘法:最经典的工具变量方法,通过两个阶段的回归来估计因果效应。

- 双稳健工具变量学习器:将工具变量法的思想与双稳健估计相结合,提高了估计的稳健性。

基于神经网络的算法 (Neural network based algorithms)

利用深度学习的强大表示能力来处理复杂的非线性关系和高度数据。

- CEVAE:因果效应变分自编码器,一种结合了变分推理和神经网络的方法,适用于存在未观测混淆因子的场景。

- DragonNet:一种专门的神经网络结构,通过自适应参数估计来提高因果效应估计的效率和精度。

处理效果优化算法 (Treatment optimization algorithms)

这类方法超越了单纯的效应估计,直接聚焦于做出最优决策。

- 反事实单元选择:用于识别哪些个体单元最应该接受处理,以最大化整体效果(例如,选择哪些客户进行营销以最大化ROI)。

- 反事实价值估计器:用于估计在不同处理决策下所能带来的预期价值,从而选择价值最大的决策。

总结与选择指南

为了更直观地展示这些算法的特点和适用场景,可以参考以下表格:

| 算法类别 | 核心思想 | 典型应用场景 | 注意事项 |

| 基于树的算法 | 直接优化因果分裂准则,识别异质性群体 | 精准营销、用户分群、寻找敏感人群 | 部分算法仅支持二分类问题 |

| 元学习器 | 将因果问题转化为预测问题,复用现有ML模型 | 通用性强,可作为大部分因果问题的基线模型 | 需谨慎选择基学习器,S-Learner可能低估效应 |

| 工具变量法 | 利用工具变量解决未观测混淆问题 | 经济学、社会科学(如:政策评估)、广告投放 | 核心挑战在于找到一个有效的工具变量 |

| 神经网络法 | 用深度学习处理高维复杂数据 | 图像、文本等复杂特征下的因果推断 | 需要大量数据,计算资源消耗大,可解释性差 |

| 效果优化 | 直接输出最优决策,而非仅仅效应值 | 自动化决策系统,如自动分配优惠券或广告 | 目标是最优行动,而非理解效应本身 |

与其他库的对比

定位与设计理念对比

| 库名称 | 核心定位 | 优势 | 局限性 |

| CausalML | 专注提升建模(Uplift)和异质处理效应(HTE),优化营销/干预策略 | 丰富的Meta-Learner、树模型和深度学习算法;强工业场景适配 | 缺乏原生分布式支持;安装依赖复杂(如TensorFlow版本冲突) |

| EconML | 经济学导向的因果推断,覆盖DML、IV、DR等复杂方法,支持政策评估和异质性分析 | 算法全面(如Orthogonal Forests、Deep IV);解释工具完善(SHAP、Policy Tree) | 大数据性能不足;学习曲线较陡峭 |

| DoWhy | 因果推断流程标准化,强调因果图建模和识别(Identification-Estimation-Refutation) | 结构清晰,适合因果推理初学者;支持混淆变量敏感性分析 | 高级算法较少;依赖外部库(如Statsmodels)进行估计 |

| Fast-Causal-Inference | 超大规模数据因果分析,基于OLAP引擎(ClickHouse/StarRocks) | 亿级数据秒级计算;SQL交互降低使用门槛 | 部署复杂;功能聚焦基础统计方法(OLS、Matching) |

关键差异:

- CausalML和EconML侧重机器学习融合,但CausalML更偏向业务决策优化,EconML更学术化。

- DoWhy强调因果逻辑链完整性,Fast-Causal-Inference解决工程性能瓶颈。

- 相比EconML(微软开发),CausalML 更注重基于树的模型和元学习器。

- 与DoWhy(基于因果图模型)互补,后者侧重因果发现,而 CausalML 侧重效应估计。

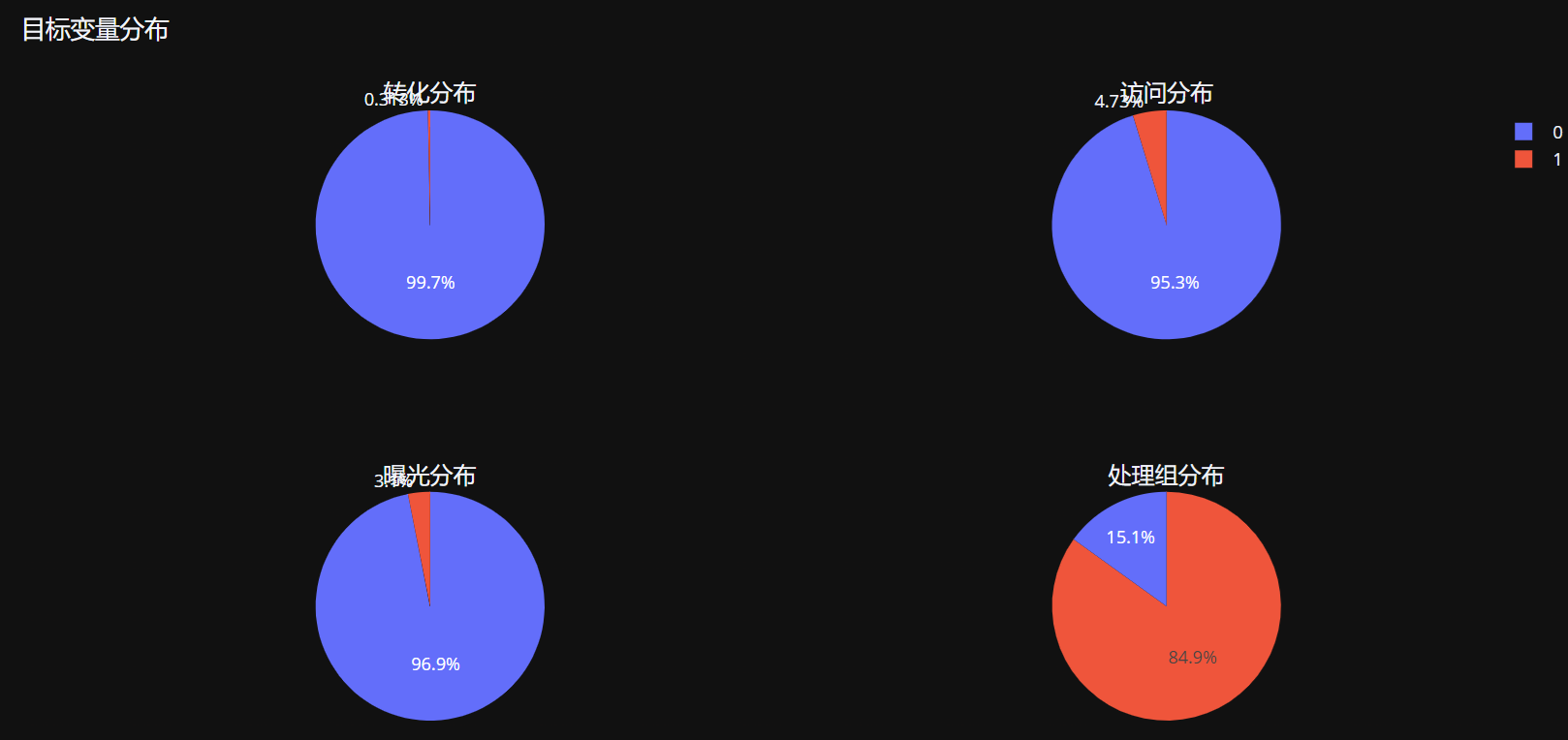

数据集简介

数据集概述

- 发布机构:Criteo AI Lab(全球效果营销科技公司)。

- 伴随论文:”A Large Scale Benchmark for Uplift Modeling”

- 下载地址:Criteo Uplift Prediction Dataset – Criteo AI Lab

- 规模:1,300万行 × 16列(13M用户行为记录)

- 关键字段:

- f0-f11:12个匿名化特征(浮点型)

- treatment:是否接受广告(1=实验组,0=对照组)

- conversion:是否转化(二元标签)

- visit:是否访问网站(二元标签)

- exposure:是否实际曝光广告(二元标签)

核心目标:识别广告对用户行为的增量效果(即用户因广告而产生的新转化)。

重点字段说明

treatment 和 exposure 是两个紧密相关但本质不同的字段,它们共同反映广告实验的 理想设计 与 实际执行 之间的差异。

- treatment:随机分配的组别标签,用户被随机分配到处理组(1)或对照组(0)。处理组计划被展示广告,对照组计划不展示广告。

- exposure:实际广告曝光状态,用户是否真正看到广告(1=实际曝光,0=未曝光)。受现实因素影响(如用户未登录、广告加载失败等)。

在实际应用 Criteo 数据集进行增量建模(Uplift Modeling)或因果推断时,需根据目标选择不同策略处理 treatment 和 exposure 字段。

| 目标 | 核心字段 | 方法选择 |

| 评估广告分配策略效果(计划干预的影响) | treatment | ITT(Intent-to-Treat) |

| 评估广告曝光真实效果(实际触达的因果效应) | exposure | CACE/LATE(工具变量法) |

| 预测用户对广告的响应敏感性 | 二者结合 | 异质性建模 |

数据探索

import pandas as pd

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

import plotly.figure_factory as ff

from scipy import stats

import warnings

warnings.filterwarnings('ignore')

# 加载数据(这里假设已经下载了Criteo数据集)

df = pd.read_csv("data/criteo-data.csv")

# 显示数据集基本信息

print("数据集形状:", df.shape)

print("\n前5行数据:")

print(df.head())

print("\n列信息:")

print(df.info())

print("\n描述性统计:")

print(df.describe())

# 定义特征列表

numeric_features = [f'f{i}' for i in range(12)] # f0到f11

target_variables = ['conversion', 'visit', 'exposure']

treatment_var = 'treatment'

# 1. 缺失值分析

missing_values = df.isnull().sum()

missing_percentage = (missing_values / len(df)) * 100

# 将百分比转换为小数(0-1范围)

missing_percentage_decimal = missing_percentage / 100

fig = px.bar(x=missing_percentage.index, y=missing_percentage_decimal.values,

title='各特征缺失值百分比',

labels={'x': '特征', 'y': '缺失值百分比'})

# 设置 y 轴范围为 0 到 1,并格式化为百分比显示

fig.update_layout(

xaxis_tickangle=-45,

yaxis=dict(

range=[0, 1],

tickformat='.0%' # 将刻度显示为百分比格式

)

)

fig.show()

# 2. 目标变量分析

fig = make_subplots(

rows=2, cols=2,

subplot_titles=('转化分布', '访问分布', '曝光分布', '处理组分布'),

specs=[[{"type": "pie"}, {"type": "pie"}],

[{"type": "pie"}, {"type": "pie"}]]

)

conversion_counts = df['conversion'].value_counts()

fig.add_trace(go.Pie(values=conversion_counts.values, labels=conversion_counts.index, name="转化"), 1, 1)

visit_counts = df['visit'].value_counts()

fig.add_trace(go.Pie(values=visit_counts.values, labels=visit_counts.index, name="访问"), 1, 2)

exposure_counts = df['exposure'].value_counts()

fig.add_trace(go.Pie(values=exposure_counts.values, labels=exposure_counts.index, name="曝光"), 2, 1)

treatment_counts = df['treatment'].value_counts()

fig.add_trace(go.Pie(values=treatment_counts.values, labels=treatment_counts.index, name="处理组"), 2, 2)

fig.update_layout(height=800, title_text="目标变量分布")

fig.show()

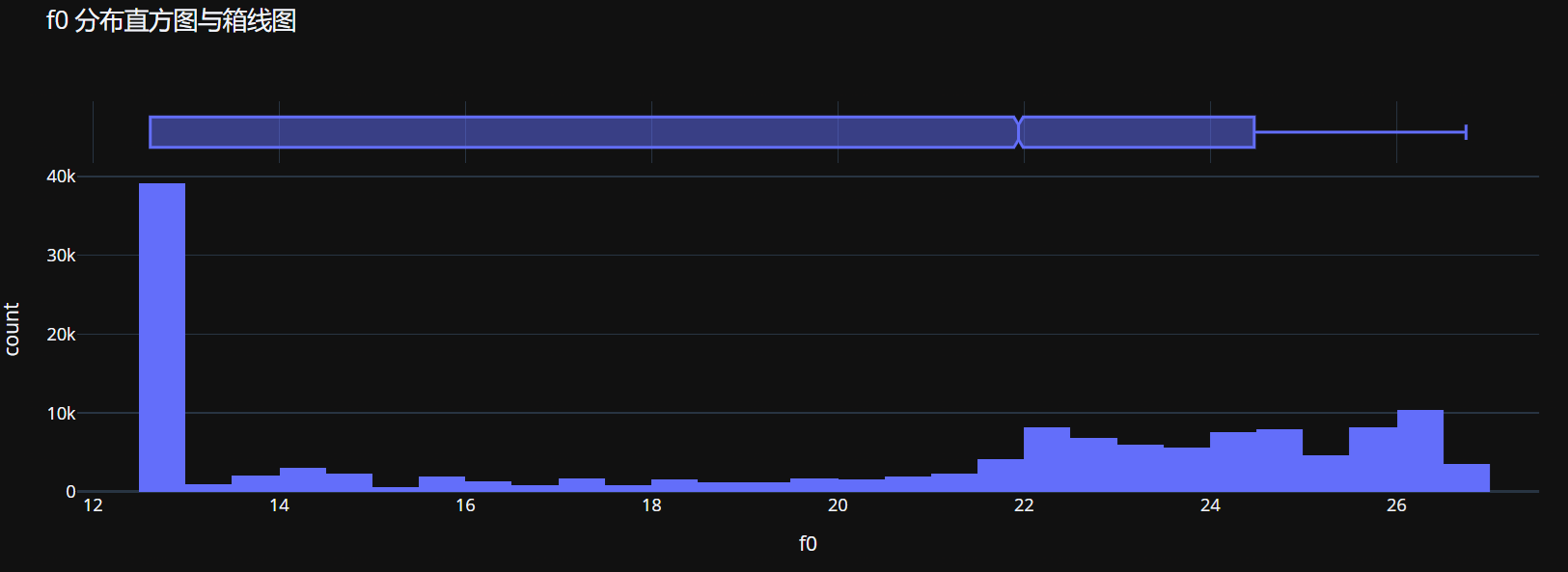

# 3. 数值特征单变量分析

# 3.1 分布直方图

for i, feature in enumerate(numeric_features[:1]): # 只分析前1个特征

fig = px.histogram(df, x=feature, marginal="box",

title=f'{feature} 分布直方图与箱线图',

nbins=50)

fig.show()

# 3.2 统计摘要表

feature_stats = []

for feature in numeric_features:

stats_data = {

'Feature': feature,

'Mean': df[feature].mean(),

'Median': df[feature].median(),

'Std': df[feature].std(),

'Min': df[feature].min(),

'Max': df[feature].max(),

'Skewness': df[feature].skew(),

'Kurtosis': df[feature].kurtosis(),

'Missing': df[feature].isnull().sum()

}

feature_stats.append(stats_data)

feature_stats_df = pd.DataFrame(feature_stats)

print("数值特征统计摘要:")

print(feature_stats_df)

# 3.3 异常值检测

outlier_stats = []

for feature in numeric_features:

Q1 = df[feature].quantile(0.25)

Q3 = df[feature].quantile(0.75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

outliers = df[(df[feature] < lower_bound) | (df[feature] > upper_bound)].shape[0]

outlier_percentage = (outliers / len(df)) * 100

outlier_stats.append({

'Feature': feature,

'Outliers': outliers,

'Outlier_Percentage': outlier_percentage,

'Lower_Bound': lower_bound,

'Upper_Bound': upper_bound

})

outlier_stats_df = pd.DataFrame(outlier_stats)

print("\n异常值统计:")

print(outlier_stats_df)

# 4. 特征与目标变量关系分析

# 4.1 数值特征与转化率的关系

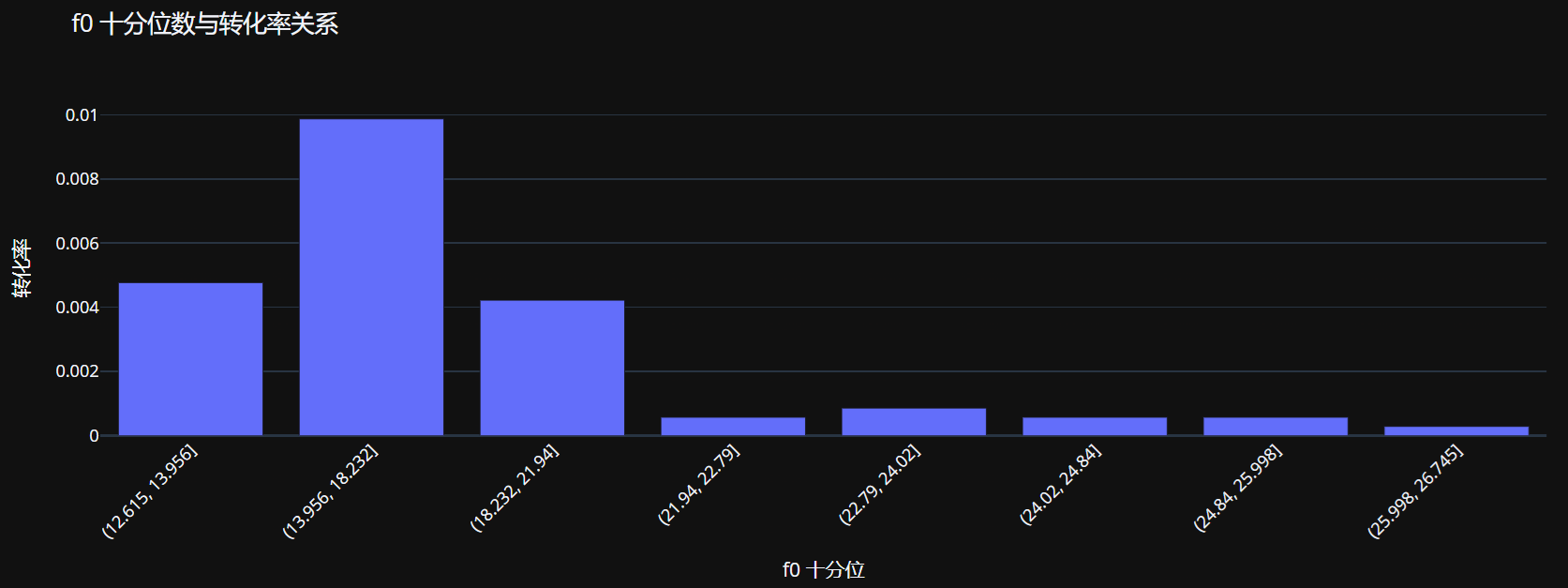

for feature in numeric_features[:1]: # 只分析前1个特征

# 创建十分位数分箱

df['decile'] = pd.qcut(df[feature], q=10, duplicates='drop')

# 将 Interval 对象转换为字符串

df['decile_str'] = df['decile'].astype(str)

decile_ctr = df.groupby('decile_str')['conversion'].mean().reset_index()

fig = px.bar(decile_ctr, x='decile_str', y='conversion',

title=f'{feature} 十分位数与转化率关系',

labels={'decile_str': f'{feature} 十分位', 'conversion': '转化率'})

fig.update_layout(xaxis_tickangle=-45)

fig.show()

# 散点图(采样以避免过度绘制)

sample_df = df.sample(n=min(5000, len(df)), random_state=42)

fig = px.scatter(sample_df, x=feature, y='conversion',

trendline="lowess",

title=f'{feature} 与转化率关系散点图',

opacity=0.5)

fig.show()

# 4.2 特征与处理组交互效应

for feature in numeric_features[:1]:

# 处理组和对照组的特征分布对比

fig = px.box(df, x='treatment', y=feature,

title=f'{feature} 在处理组和对照组中的分布')

fig.show()

# 计算处理组和对照组中特征与转化的相关性差异

treatment_corr = df[df['treatment'] == 1][[feature, 'conversion']].corr().iloc[0, 1]

control_corr = df[df['treatment'] == 0][[feature, 'conversion']].corr().iloc[0, 1]

print(f"{feature} 与转化的相关性 - 处理组: {treatment_corr:.3f}, 对照组: {control_corr:.3f}")

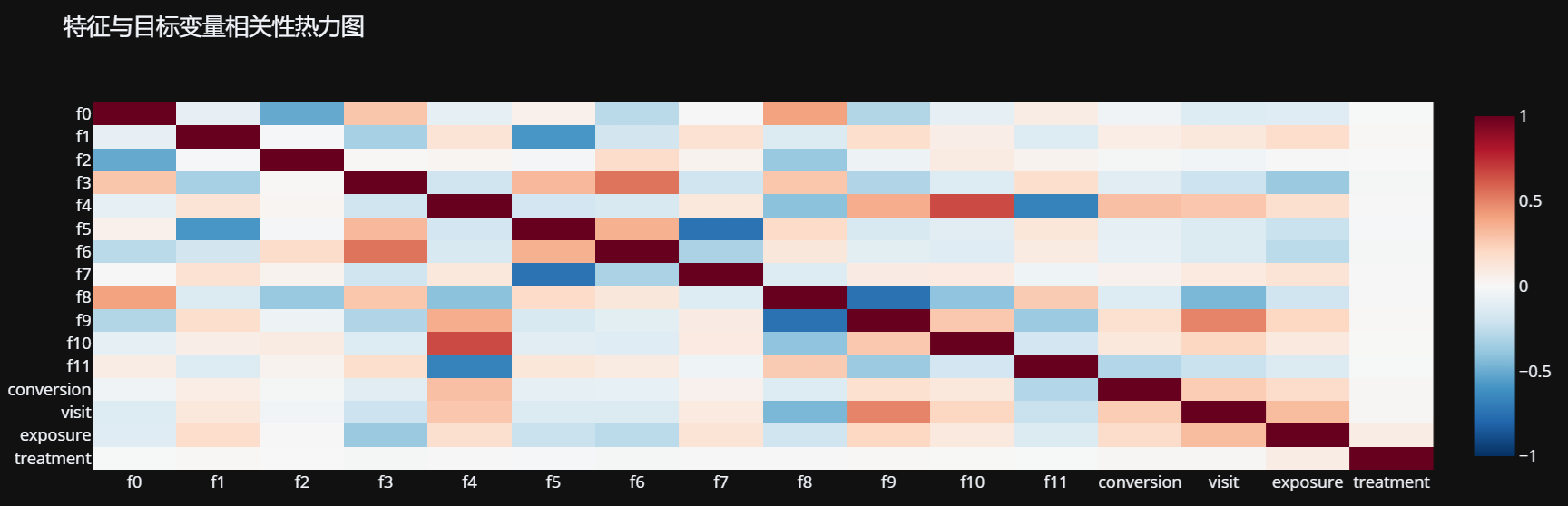

# 5. 多变量分析

# 5.1 相关性矩阵

corr_matrix = df[numeric_features + target_variables + [treatment_var]].corr()

fig = px.imshow(corr_matrix,

title='特征与目标变量相关性热力图',

aspect="auto",

color_continuous_scale='RdBu_r',

zmin=-1, zmax=1)

fig.show()

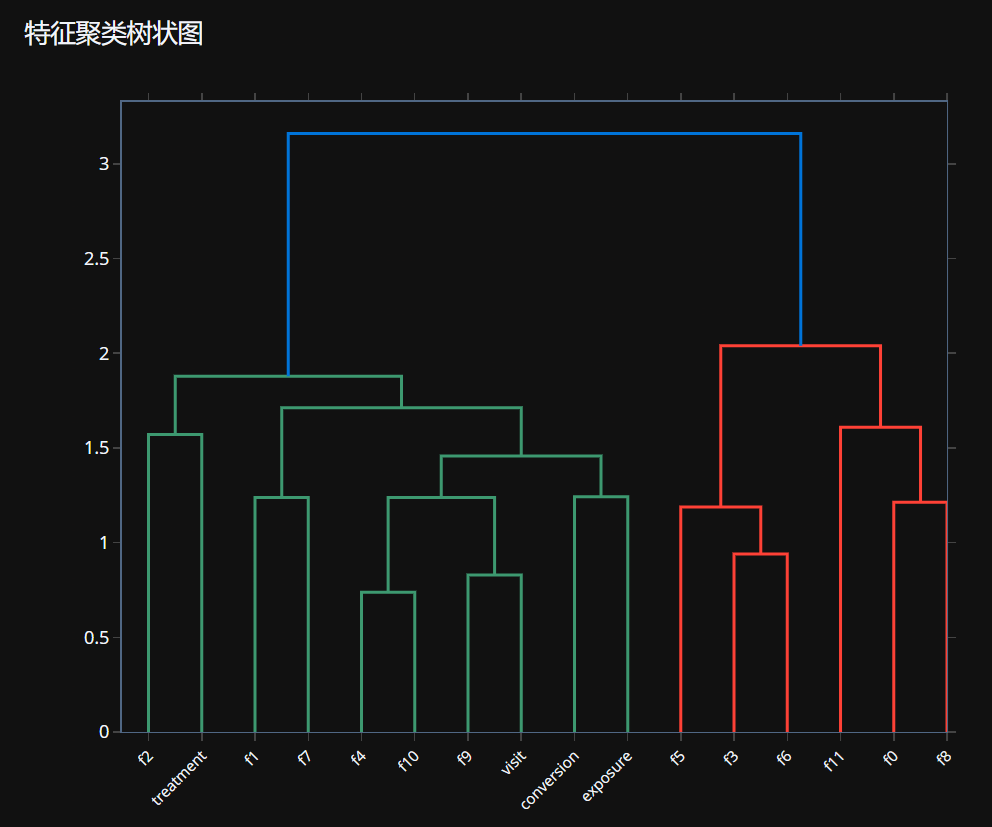

# 5.2.1 特征聚类树状图

# 创建树状图

dendro = ff.create_dendrogram(corr_matrix,

orientation='bottom',

labels=corr_matrix.columns.tolist()) # 明确设置标签

# 更新树状图布局

dendro.update_layout(

height=600,

title_text="特征聚类树状图",

showlegend=False,

margin=dict(l=100, r=50, t=80, b=100)

)

# 调整x轴标签 - 旋转并确保显示

dendro.update_xaxes(

tickangle=-45, # 旋转标签避免重叠

tickfont=dict(size=10) # 调整字体大小

)

# 显示树状图

dendro.show()

# 5.2.2 特征相关性热力图(下方图片)

# 创建热力图

heatmap = go.Heatmap(z=corr_matrix.values,

x=corr_matrix.columns,

y=corr_matrix.index,

colorscale='RdBu_r',

zmin=-1, zmax=1)

# 创建独立的热力图图表

fig_heatmap = go.Figure(data=[heatmap])

# 更新热力图布局

fig_heatmap.update_layout(

title_text="特征相关性热力图",

margin=dict(l=100, r=50, t=80, b=100)

)

# 反转热力图的y轴以匹配树状图的顺序

fig_heatmap.update_yaxes(autorange="reversed")

# 显示热力图

fig_heatmap.show()

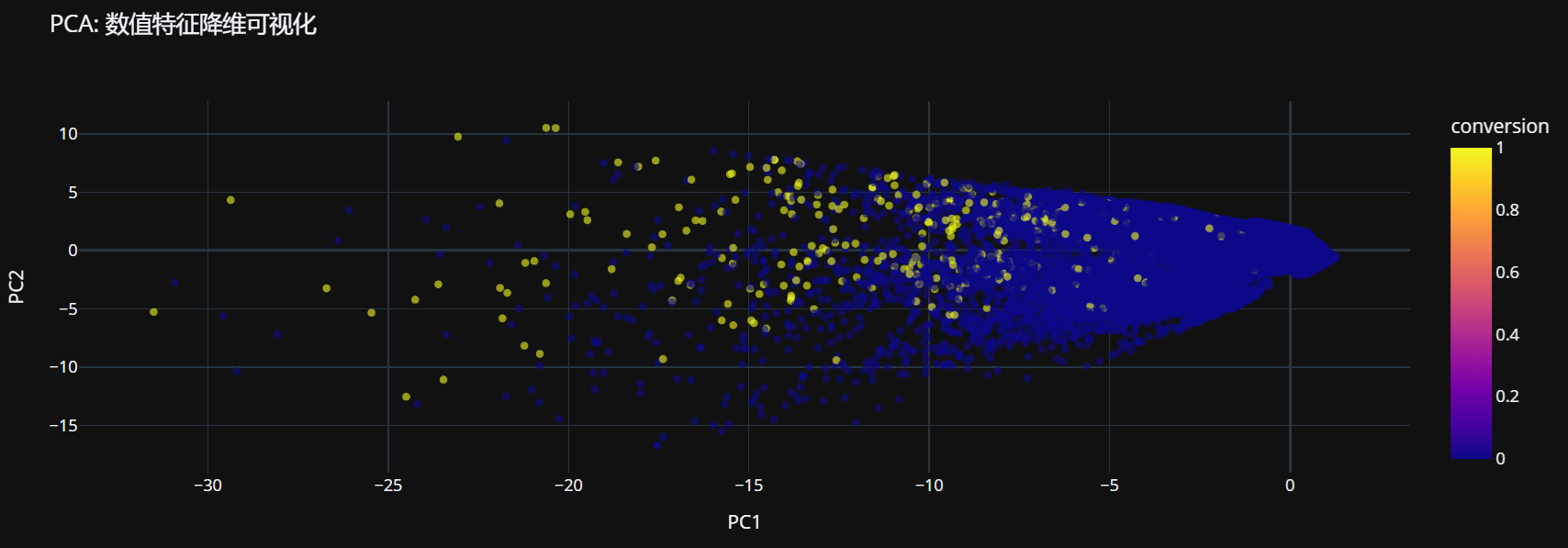

# 5.3 主成分分析 (PCA) 可视化

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

# 选择数值特征进行PCA

X = df[numeric_features].fillna(df[numeric_features].median())

# 标准化数据

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# 应用PCA

pca = PCA(n_components=2)

principal_components = pca.fit_transform(X_scaled)

# 创建PCA结果 DataFrame

pca_df = pd.DataFrame(data=principal_components,

columns=['PC1', 'PC2'])

pca_df['conversion'] = df['conversion'].values

pca_df['treatment'] = df['treatment'].values

# 可视化PCA结果

fig = px.scatter(pca_df, x='PC1', y='PC2', color='conversion',

title='PCA: 数值特征降维可视化',

opacity=0.6)

fig.show()

# 6. 特征重要性分析

# 6.1 基于随机森林的特征重要性

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

# 准备数据

X = df[numeric_features].fillna(df[numeric_features].median())

y = df['conversion']

# 划分训练测试集

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# 训练随机森林模型

rf = RandomForestClassifier(n_estimators=100, random_state=42)

rf.fit(X_train, y_train)

# 获取特征重要性

feature_importance = pd.DataFrame({

'feature': numeric_features,

'importance': rf.feature_importances_

}).sort_values('importance', ascending=False)

# 可视化特征重要性

fig = px.bar(feature_importance, x='importance', y='feature', orientation='h',

title='基于随机森林的特征重要性排序',

labels={'importance': '重要性', 'feature': '特征'})

fig.show()

# 6.2 基于互信息的特征重要性

from sklearn.feature_selection import mutual_info_classif

# 计算互信息

mi_scores = mutual_info_classif(X, y, random_state=42)

mi_df = pd.DataFrame({

'feature': numeric_features,

'mi_score': mi_scores

}).sort_values('mi_score', ascending=False)

# 可视化互信息得分

fig = px.bar(mi_df, x='mi_score', y='feature', orientation='h',

title='基于互信息的特征重要性排序',

labels={'mi_score': '互信息得分', 'feature': '特征'})

fig.show()

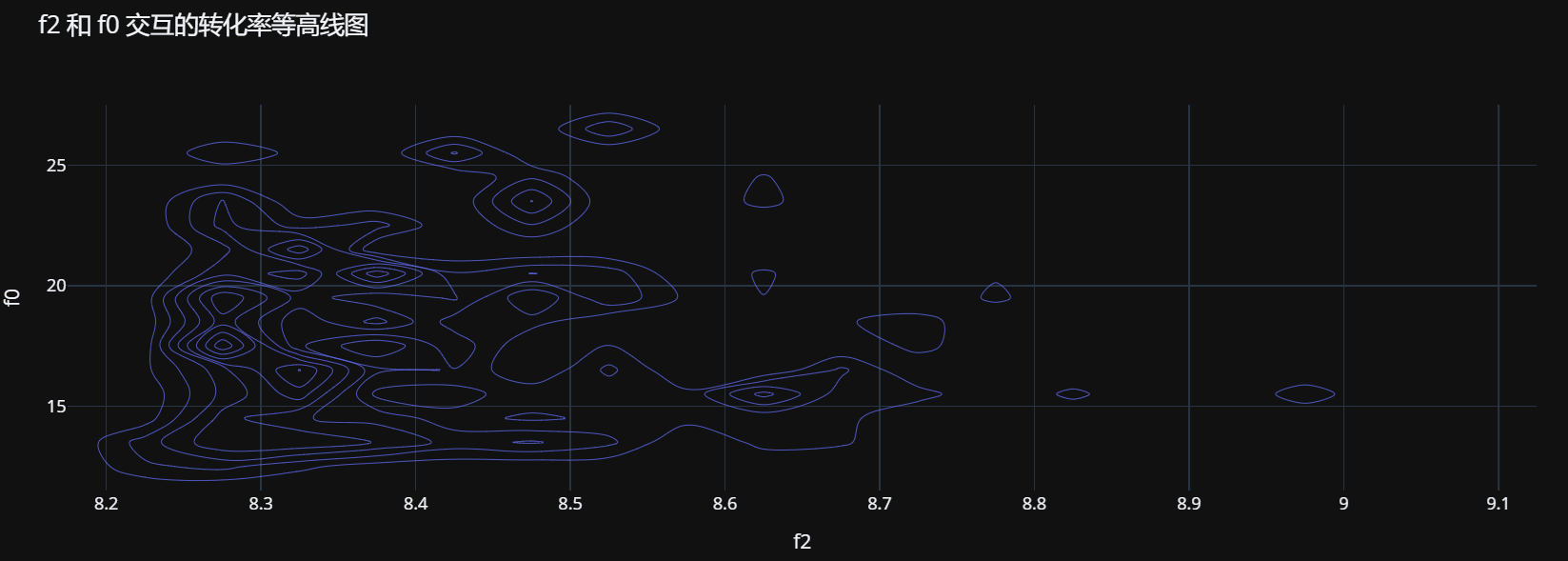

# 7. 特征交互效应分析

# 7.1 选择两个最重要的特征分析交互效应

top_features = feature_importance.head(2)['feature'].values

if len(top_features) == 2:

feature1, feature2 = top_features

# 创建3D散点图

sample_df = df.sample(n=min(3000, len(df)), random_state=42)

fig = px.scatter_3d(sample_df, x=feature1, y=feature2, z='conversion',

color='conversion', opacity=0.7,

title=f'{feature1} 和 {feature2} 与转化率的交互效应')

fig.show()

# 创建二维等高线图

fig = px.density_contour(df, x=feature1, y=feature2, z='conversion',

histfunc='avg',

title=f'{feature1} 和 {feature2} 交互的转化率等高线图')

fig.show()

# 9. 高级特征洞察

# 9.1 特征分布对比:转化用户 vs 非转化用户

for feature in numeric_features[:1]:

converted = df[df['conversion'] == 1][feature]

not_converted = df[df['conversion'] == 0][feature]

# 创建分布对比图

fig = ff.create_distplot([converted, not_converted],

['转化用户', '非转化用户'],

bin_size=0.2, show_rug=False)

fig.update_layout(title_text=f'{feature} 在转化与非转化用户中的分布对比')

fig.show()

# 执行t检验

t_stat, p_value = stats.ttest_ind(converted, not_converted, nan_policy='omit')

print(f"{feature} 的t检验: t={t_stat:.3f}, p={p_value:.3f}")

# 9.2 特征工程建议

print("\n特征工程建议:")

for feature in numeric_features:

skewness = df[feature].skew()

if abs(skewness) > 1:

print(f"- {feature} 偏度较高 ({skewness:.2f}),考虑进行对数变换或Box-Cox变换")

# 检查特征是否具有大量零值

zero_percentage = (df[feature] == 0).sum() / len(df) * 100

if zero_percentage > 50:

print(f"- {feature} 有 {zero_percentage:.1f}% 的零值,考虑创建二元标志特征")

# 10. 保存分析结果

feature_stats_df.to_csv('feature_statistics.csv', index=False)

outlier_stats_df.to_csv('outlier_statistics.csv', index=False)

feature_importance.to_csv('feature_importance.csv', index=False)

print("特征探索完成! 详细统计结果已保存到CSV文件。")

# 创建2x2的子图布局

fig = make_subplots(

rows=2, cols=2,

subplot_titles=('Treatment Distribution (1=Group)',

'Exposure Distribution (1=Exposed)',

'Conversion Distribution (1=Converted)',

'Visit Distribution (1=Visited)')

)

# 计算每个变量的计数

treatment_counts = df['treatment'].value_counts().sort_index()

exposure_counts = df['exposure'].value_counts().sort_index()

conversion_counts = df['conversion'].value_counts().sort_index()

visit_counts = df['visit'].value_counts().sort_index()

# 添加treatment分布图

fig.add_trace(

go.Bar(x=treatment_counts.index, y=treatment_counts.values,

name="Treatment", marker_color=px.colors.qualitative.Set1[0]),

row=1, col=1

)

# 添加exposure分布图

fig.add_trace(

go.Bar(x=exposure_counts.index, y=exposure_counts.values,

name="Exposure", marker_color=px.colors.qualitative.Set1[1]),

row=1, col=2

)

# 添加conversion分布图

fig.add_trace(

go.Bar(x=conversion_counts.index, y=conversion_counts.values,

name="Conversion", marker_color=px.colors.qualitative.Set1[2]),

row=2, col=1

)

# 添加visit分布图

fig.add_trace(

go.Bar(x=visit_counts.index, y=visit_counts.values,

name="Visit", marker_color=px.colors.qualitative.Set1[3]),

row=2, col=2

)

# 更新布局

fig.update_layout(

title_text="关键变量分布分析",

height=600,

showlegend=False,

template="plotly_white" # 使用白色网格背景,类似于seaborn的whitegrid

)

# 更新x轴和y轴标签

fig.update_xaxes(title_text="Value", row=1, col=1)

fig.update_xaxes(title_text="Value", row=1, col=2)

fig.update_xaxes(title_text="Value", row=2, col=1)

fig.update_xaxes(title_text="Value", row=2, col=2)

fig.update_yaxes(title_text="Count", row=1, col=1)

fig.update_yaxes(title_text="Count", row=1, col=2)

fig.update_yaxes(title_text="Count", row=2, col=1)

fig.update_yaxes(title_text="Count", row=2, col=2)

# 显示图形

fig.show()

# 打印具体比例

print(f"Treatment Group 比例: {df['treatment'].mean():.4f}")

print(f"实际曝光率 (Exposure): {df['exposure'].mean():.4f}")

print(f"整体转化率 (Conversion): {df['conversion'].mean():.4f}")

print(f"整体访问率 (Visit): {df['visit'].mean():.4f}")

Treatment Group 比例: 0.8494

- 实际曝光率 (Exposure): 0.0310

- 整体转化率 (Conversion): 0.0031

- 整体访问率 (Visit): 0.0473

初步ITT估计

进行初步ITT(Intention-To-Treat)分析是因果推断中至关重要的一步,其核心目的可以概括为:评估“策略或干预措施本身”的“真实世界”整体有效性,而不是在理想条件下该策略的“理论”有效性。它是一种从“上帝视角”或“决策者视角”进行的评估。

核心目的:估计“策略部署”的净效果

想象一下,公司决定对一部分用户投放一个新广告(处理组),另一部分不投放(对照组)。ITT分析回答的问题是:“决定给用户投放这个广告,最终为我们带来了多少额外的转化?”它衡量的是处理分配(Intention) 本身带来的宏观平均效果,而不是处理接收(Treatment Received)的效果。

为什么ITT估计如此重要和实用?

- 反映现实世界的真实影响。在现实世界中,任何策略的执行都不可能完美:

- 处理组中:有些用户可能根本没看到广告(广告位没加载、用户快速划走)。

- 对照组中:有些用户可能通过其他渠道接触到了类似广告。

- ITT包含了所有这些“不完美”的情况。它告诉你,当你决定实施这个策略时,最终能期望得到什么样的整体业务提升。这对于高层决策者(如CEO、产品总监)至关重要,因为他们关心的是战略层面的投入产出比(ROI)。

- 避免“选择偏差”。如果我们只分析那些“真正看到广告的用户”和“没看到广告的用户”,就会引入巨大的偏差。因为“看到广告”这个行为本身就不是随机的——可能是更活跃的用户、更爱点击的用户才看到了广告。比较这两个群体,得出的效果会严重高估,因为用户本身的差异(活跃度)影响了结果。ITT通过严格遵守随机分组的原始分配,完美地保持了处理组和对照组之间的可比性,从而获得无偏的因果效应估计。

- 政策评估的黄金标准。在医学临床试验、经济学、社会科学和政策评估中,ITT被视为黄金标准。因为它回答了:“推出这项新政策/新药物,预计能对社会/病患群体产生多大的平均效益?”这比“在完美服药条件下药效有多好”这个问题的答案,对于公共卫生决策者来说更有价值。

ITT的局限性及后续步骤

虽然ITT目的明确且非常重要,但它也有其局限性:

- 效果“稀释”:由于处理组中有人没接受处理,对照组中有人意外接受了处理,ITT估计值通常会是真实处理效应的一个“稀释”后的版本(即绝对值更小)。它衡量的是策略执行的有效性,而不是策略本身的理论最大有效性。

- 无法回答异质性性问题:ITT给出的是一个平均效果。它无法告诉我们“广告对哪类用户最有效?”这类问题。

import pandas as pd

import numpy as np

import plotly.express as px

from statsmodels.formula.api import ols

import warnings

warnings.filterwarnings('ignore')

# 加载数据(这里假设已经下载了Criteo数据集)

df = pd.read_csv("data/criteo-data.csv")

numeric_features = [f'f{i}' for i in range(12)]

# 初步ITT分析

# 计算处理组和对照组的转化率

conversion_rates = df.groupby('treatment')['conversion'].agg(['mean', 'count', 'std'])

conversion_rates.columns = ['conversion_rate', 'count', 'std']

print("\n处理组和对照组的转化率:")

print(conversion_rates)

# 计算ITT估计值

treatment_conversion = conversion_rates.loc[1, 'conversion_rate']

control_conversion = conversion_rates.loc[0, 'conversion_rate']

itt_estimate = treatment_conversion - control_conversion

print(f"\nITT估计值: {itt_estimate:.6f}")

# 统计显著性检验

# 使用t检验评估ITT的统计显著性

from scipy import stats

treatment_group = df[df['treatment'] == 1]['conversion']

control_group = df[df['treatment'] == 0]['conversion']

t_stat, p_value = stats.ttest_ind(treatment_group, control_group, equal_var=False)

print(f"t统计量: {t_stat:.4f}")

print(f"p值: {p_value:.6f}")

# 计算置信区间

def calculate_ci(mean1, mean2, std1, std2, n1, n2, alpha=0.95):

"""计算两组均值差异的置信区间"""

mean_diff = mean1 - mean2

std_err = np.sqrt((std1**2 / n1) + (std2**2 / n2))

margin_error = stats.t.ppf((1 + alpha) / 2, min(n1, n2) - 1) * std_err

ci_lower = mean_diff - margin_error

ci_upper = mean_diff + margin_error

return ci_lower, ci_upper

ci_lower, ci_upper = calculate_ci(

treatment_conversion, control_conversion,

conversion_rates.loc[1, 'std'], conversion_rates.loc[0, 'std'],

conversion_rates.loc[1, 'count'], conversion_rates.loc[0, 'count']

)

print(f"95%置信区间: [{ci_lower:.6f}, {ci_upper:.6f}]")

# 可视化ITT结果

# 创建处理组和对照组转化率的柱状图

fig = px.bar(x=['处理组', '对照组'],

y=[treatment_conversion, control_conversion],

title='处理组和对照组的转化率比较',

labels={'x': '组别', 'y': '转化率'},

text=[f'{treatment_conversion:.4f}', f'{control_conversion:.4f}'])

fig.update_traces(texttemplate='%{text:.4f}', textposition='outside')

fig.add_annotation(x=0.5, y=max(treatment_conversion, control_conversion) + 0.01,

text=f"ITT估计值: {itt_estimate:.6f}<br>p值: {p_value:.6f}",

showarrow=False)

fig.show()

# 按特征分组的ITT分析

# 选择几个重要特征进行分析(这里假设f0-f3是重要特征)

features_to_analyze = ['f0', 'f1', 'f2', 'f3']

for feature in features_to_analyze:

# 将连续特征分箱

if df[feature].nunique() > 10:

# 使用标签而不是Interval对象

df[f'{feature}_bin'] = pd.qcut(df[feature], q=5, duplicates='drop', labels=False)

group_var = f'{feature}_bin'

# 为每个分箱创建有意义的标签

bins = pd.qcut(df[feature], q=5, duplicates='drop')

bin_labels = [f'分箱 {i+1}: {bins.cat.categories[i]}' for i in range(len(bins.cat.categories))]

# 将数值标签映射为有意义的字符串标签

df[f'{feature}_bin_str'] = df[group_var].map(lambda x: bin_labels[x] if not pd.isna(x) else '缺失值')

group_var = f'{feature}_bin_str'

else:

# 对于类别特征,直接使用原始值

group_var = feature

df[group_var] = df[group_var].astype(str)

# 计算每个分组的ITT

grouped_itt = df.groupby(group_var).apply(

lambda x: pd.Series({

'treatment_rate': x['treatment'].mean(),

'conversion_rate': x['conversion'].mean(),

'itt_estimate': x[x['treatment'] == 1]['conversion'].mean() -

x[x['treatment'] == 0]['conversion'].mean(),

'count': len(x)

})

).reset_index()

# 可视化按特征分组的ITT

fig = px.bar(grouped_itt, x=group_var, y='itt_estimate',

title=f'按 {feature} 分组的ITT估计',

labels={group_var: feature, 'itt_estimate': 'ITT估计值'})

fig.add_hline(y=itt_estimate, line_dash="dash", line_color="red",

annotation_text="总体ITT")

fig.update_layout(xaxis_tickangle=-45)

fig.show()

# 6. 使用线性回归进行ITT估计(考虑协变量)

# 这种方法可以控制其他变量的影响,得到更精确的ITT估计

formula = 'conversion ~ treatment + ' + ' + '.join(numeric_features)

model = ols(formula, data=df).fit()

print("\n线性回归结果 (控制协变量):")

print(model.summary())

# 提取处理效应的估计值和置信区间

treatment_effect = model.params['treatment']

treatment_ci_lower = model.conf_int().loc['treatment', 0]

treatment_ci_upper = model.conf_int().loc['treatment', 1]

print(f"\n控制协变量后的处理效应: {treatment_effect:.6f}")

print(f"95%置信区间: [{treatment_ci_lower:.6f}, {treatment_ci_upper:.6f}]")

# 7. 异质性处理效应探索

# 检查处理效应是否在不同特征水平上存在差异

interaction_results = []

for feature in features_to_analyze:

formula = f'conversion ~ treatment * {feature}'

model = ols(formula, data=df).fit()

interaction_effect = model.params[f'treatment:{feature}']

interaction_pvalue = model.pvalues[f'treatment:{feature}']

interaction_results.append({

'feature': feature,

'interaction_effect': interaction_effect,

'p_value': interaction_pvalue

})

interaction_df = pd.DataFrame(interaction_results)

print("\n处理效应异质性分析 (交互项):")

print(interaction_df)

# 可视化交互效应

fig = px.bar(interaction_df, x='feature', y='interaction_effect',

title='处理效应的异质性分析',

labels={'feature': '特征', 'interaction_effect': '交互效应'},

color='p_value',

color_continuous_scale='viridis_r')

fig.add_hline(y=0, line_dash="dash", line_color="red")

fig.show()

# 8. 敏感性分析

# 检查ITT估计对模型设定的敏感性

# 使用不同的模型设定重新估计ITT

models = {

'简单均值差异': itt_estimate,

'控制所有协变量': treatment_effect,

}

# 添加只控制部分协变量的模型

for feature in features_to_analyze[:2]:

formula = f'conversion ~ treatment + {feature}'

model = ols(formula, data=df).fit()

models[f'控制{feature}'] = model.params['treatment']

# 可视化不同模型的ITT估计

sensitivity_df = pd.DataFrame.from_dict(models, orient='index', columns=['ITT估计']).reset_index()

sensitivity_df.columns = ['模型', 'ITT估计']

fig = px.bar(sensitivity_df, x='模型', y='ITT估计',

title='ITT估计的敏感性分析',

labels={'模型': '模型设定', 'ITT估计': 'ITT估计值'})

fig.update_layout(xaxis_tickangle=-45)

fig.show()

# 9. 报告主要发现

print("\n" + "="*50)

print("ITT估计主要发现:")

print("="*50)

print(f"1. 初步ITT估计值: {itt_estimate:.6f}")

print(f"2. 统计显著性: {'显著' if p_value < 0.05 else '不显著'} (p值: {p_value:.6f})")

print(f"3. 95%置信区间: [{ci_lower:.6f}, {ci_upper:.6f}]")

print(f"4. 控制协变量后的ITT估计: {treatment_effect:.6f}")

print(f"5. 处理效应可能存在的异质性: {len(interaction_df[interaction_df['p_value'] < 0.05])} 个特征显示显著交互效应")

# 计算提升百分比

if control_conversion > 0:

lift_percentage = (itt_estimate / control_conversion) * 100

print(f"6. 相对于对照组的提升: {lift_percentage:.2f}%")

else:

print("6. 对照组转化率为零,无法计算提升百分比")

print("="*50)

# 10. 保存结果到文件

results = {

'itt_estimate': itt_estimate,

'p_value': p_value,

'ci_lower': ci_lower,

'ci_upper': ci_upper,

'treatment_effect_with_covariates': treatment_effect,

'treatment_ci_lower': treatment_ci_lower,

'treatment_ci_upper': treatment_ci_upper

}

results_df = pd.DataFrame.from_dict(results, orient='index', columns=['值'])

results_df.to_csv('itt_estimation_results.csv')

print("结果已保存到 itt_estimation_results.csv")

处理组和对照组的转化率:

conversion_rate count std

treatment

0 0.001615 21048 0.040160

1 0.003394 118748 0.058157

ITT估计值: 0.001778

t统计量: 5.4854

p值: 0.000000

95%置信区间: [0.001143, 0.002414]

线性回归结果 (控制协变量):

OLS Regression Results

==============================================================================

Dep. Variable: conversion R-squared: 0.116

Model: OLS Adj. R-squared: 0.115

Method: Least Squares F-statistic: 1405.

Date: Thu, 16 Oct 2025 Prob (F-statistic): 0.00

Time: 10:23:23 Log-Likelihood: 2.1361e+05

No. Observations: 139796 AIC: -4.272e+05

Df Residuals: 139782 BIC: -4.271e+05

Df Model: 13

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

Intercept -0.1903 0.035 -5.438 0.000 -0.259 -0.122

treatment 0.0013 0.000 3.421 0.001 0.001 0.002

f0 -8.136e-05 3.92e-05 -2.073 0.038 -0.000 -4.45e-06

f1 -0.0079 0.002 -4.007 0.000 -0.012 -0.004

f2 -0.0017 0.001 -2.439 0.015 -0.003 -0.000

f3 -0.0011 0.000 -7.172 0.000 -0.001 -0.001

f4 0.0464 0.001 55.121 0.000 0.045 0.048

f5 -0.0054 0.001 -7.499 0.000 -0.007 -0.004

f6 -0.0002 4.57e-05 -5.122 0.000 -0.000 -0.000

f7 -0.0010 0.000 -4.617 0.000 -0.001 -0.001

f8 -0.0019 0.005 -0.363 0.717 -0.012 0.008

f9 0.0002 3.92e-05 6.092 0.000 0.000 0.000

f10 -0.0376 0.001 -29.058 0.000 -0.040 -0.035

f11 -0.2551 0.010 -25.779 0.000 -0.274 -0.236

==============================================================================

Omnibus: 268075.996 Durbin-Watson: 2.003

Prob(Omnibus): 0.000 Jarque-Bera (JB): 441942011.489

Skew: 15.249 Prob(JB): 0.00

Kurtosis: 276.755 Cond. No. 8.19e+03

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 8.19e+03. This might indicate that there are

strong multicollinearity or other numerical problems.

控制协变量后的处理效应: 0.001343

95%置信区间: [0.000574, 0.002113]

处理效应异质性分析 (交互项):

feature interaction_effect p_value

0 f0 -0.000234 2.582618e-03

1 f1 0.026944 1.645609e-09

2 f2 -0.001037 4.564514e-01

3 f3 -0.002834 2.522617e-17

================================================== ITT估计主要发现: ================================================== 1. 初步ITT估计值: 0.001778 2. 统计显著性: 显著 (p值: 0.000000) 3. 95%置信区间: [0.001143, 0.002414] 4. 控制协变量后的ITT估计: 0.001343 5. 处理效应可能存在的异质性: 3 个特征显示显著交互效应 6. 相对于对照组的提升: 110.09% ================================================== 结果已保存到 itt_estimation_results.csv

“非遵从”分析

什么是“非遵从”现象?

核心定义:在随机实验或准实验中,个体被分配到一个处理组或对照组,但并未完全遵循分配方案,导致其实际接受的处理状态与初始分配不一致。

典型场景:

- 广告实验:用户被分配到“看广告”的处理组,但因未打开App、广告加载失败等原因,并未实际曝光广告。

- 优惠券实验:用户被分配到“发送优惠券”组,但可能因未查看邮件/短信而未使用优惠券。

关键变量:

- treatment(处理分配):随机分配的组别(0=对照组,1=处理组)。这是工具变量。

- exposure(实际曝光):个体实际接受的处理状态(0=未曝光,1=曝光)。这是内生处理变量。

- conversion(转化):我们关心的结果变量(如购买、点击)。

为什么要分析“非遵从”?其危害是什么?

忽略“非遵从”会导致严重的因果推断错误:

- 选择偏差:实际曝光广告的用户(exposure=1)可能本身就是更活跃、转化意愿更高的用户。直接比较 exposure=1和 exposure=0的人群的转化率,会混淆“广告效应”和“用户自身特性”,高估广告效果。

- 低估策略效果:单纯比较处理组和对照组(Intent-To-Treat, ITT分析)衡量的是“分配策略”的整体效果。如果非遵从率很高(如4%的用户未曝光),ITT效应会严重稀释“广告曝光”的真实因果效应。

分析的根本目的:得到对处理(如广告曝光)的无偏的因果效应估计。

如何诊断“非遵从”?—— 关键诊断步骤

首先必须量化非遵从的严重程度。

import pandas as pd

import numpy as np

# 1. 创建交叉表,查看 treatment 和 exposure 的一致性

cross_tab = pd.crosstab(data['treatment'], data['exposure'], margins=True)

cross_tab_perc = pd.crosstab(data['treatment'], data['exposure'], normalize='index') # 按行计算百分比

print("Treatment vs Exposure 交叉表(计数):")

print(cross_tab)

print("\nTreatment vs Exposure 交叉表(行比例):")

print(np.round(cross_tab_perc * 100, 2))

# 2. 计算关键的非遵从比例

# - 处理组中未曝光的人 (令人遗憾的非遵从)

treatment_group_no_exposure = cross_tab.loc[1, 0] / cross_tab.loc[1, 'All']

# - 对照组中曝光的人 (令人讨厌的非遵从)

control_group_with_exposure = cross_tab.loc[0, 1] / cross_tab.loc[0, 'All']

print(f"\n关键非遵从比例:")

print(f"- 在处理组中,有 {treatment_group_no_exposure*100:.2f}% 的人未能成功曝光广告。")

print(f"- 在对照组中,有 {control_group_with_exposure*100:.2f}% 的人意外曝光了广告。")

Treatment vs Exposure 交叉表(计数): exposure 0 1 All treatment 0 21048 0 21048 1 114411 4337 118748 All 135459 4337 139796 Treatment vs Exposure 交叉表(行比例): exposure 0 1 treatment 0 100.00 0.00 1 96.35 3.65

关键非遵从比例:

- 在处理组中,有 96.35% 的人未能成功曝光广告。

- 在对照组中,有 0.00% 的人意外曝光了广告。

诊断结果解读(以Criteo数据集为例):

- 对照组纯净度:对照组中00% 的人曝光广告,说明实验执行的一半是完美的。

- 处理组非遵从严重:处理组中高达40% 的人未曝光,说明广告投放的“送达率”极低。

- 实验性质转变:这不再是一个标准的A/B测试,而变成一个鼓励型设计。treatment不是一个强制命令,而是一个“机会”或“鼓励”。

如何解决“非遵从”?—— 工具变量法

当存在“非遵从”时,直接比较 exposure会导致选择偏差,而简单的 ITT分析又会稀释效应。工具变量法是解决这一难题、估计无偏因果效应的标准方法。

工具变量法的核心直觉是:寻找一个“外生的冲击”作为代理,这个冲击只通过影响我们关心的处理变量来影响结果。

一个经典的比喻:想象我们想研究“参军”对“未来收入”的影响。直接比较参军和未参军的人的收入会有偏差(因为参军的人可能来自不同社会经济背景)。但我们发现,越战时期美国使用了“征兵抽签”(Draft Lottery)号码随机决定谁被征召。这是一个绝佳的工具变量。

- 相关性:抽签号码靠前的人,参军率更高。

- 排他性约束:抽签号码本身是随机的,它不会直接影响一个人的收入能力(除了通过影响参军这个行为之外)。

于是,我们可以比较抽签号码靠前和靠后的人的收入差异,并将其归因于参军的影响。工具变量法做的就是这件事。

在案例中:

- 工具变量 (Z):treatment(随机分配)

- 内生处理变量 (D):exposure(实际曝光)

- 结果变量 (Y):conversion(转化)

工具变量必须满足两个核心假设,缺一不可:

- 相关性 (Relevance):工具变量 Z必须与内生变量 D相关。验证方法:第一阶段回归。我们运行 exposure ~ treatment的回归。

- 排他性约束 (Exclusion Restriction):工具变量 Z只能通过影响内生变量 D来影响结果 Y,不能有其他直接或间接的路径。这个假设无法被数据直接检验,必须基于逻辑和实验设计进行论证。

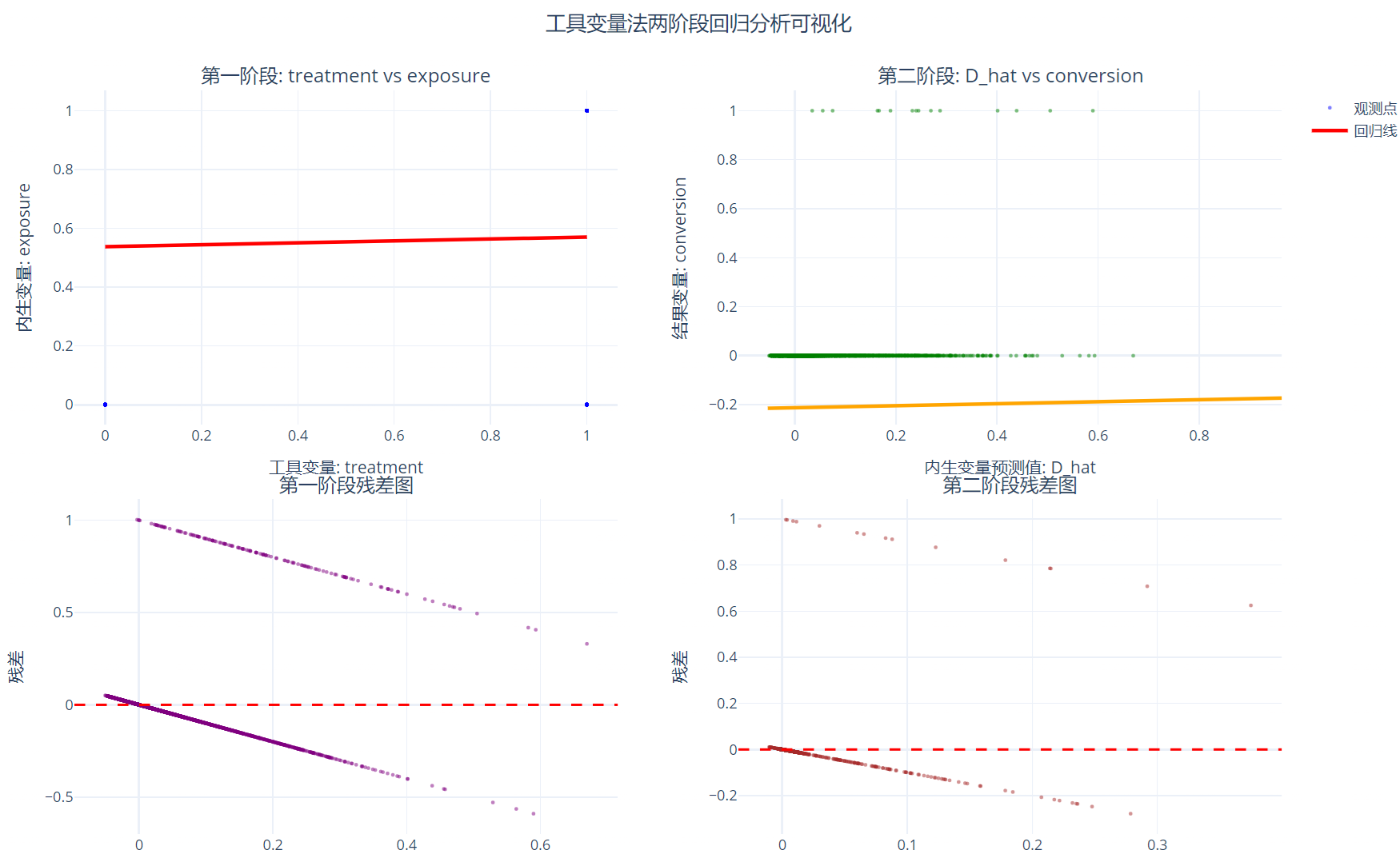

模型实现:两阶段最小二乘法 (2SLS)

工具变量法最常用的实现方式是两阶段最小二乘法,它直观地体现了“剥离内生性”的思想。

第一阶段 (First Stage):

用工具变量 Z(treatment) 和所有控制变量 X来回归内生变量 D(exposure)。

exposure = α + β * treatment + γ * X + ε

我们从这一步得到 exposure的预测值 exposure_hat。这个预测值只包含了由随机分配 treatment所解释的那部分变异,它已经“剥离”了与误差项相关的内生部分。

第二阶段 (Second Stage):

用第一阶段得到的、纯净的 exposure_hat去回归结果变量 Y(conversion)。

conversion = λ + δ * exposure_hat + θ * X + υ

这里的系数 δ就是我们最终想要的局部平均处理效应 (LATE) 的无偏估计。

import pandas as pd

import numpy as np

import statsmodels.api as sm

from sklearn.linear_model import LinearRegression

import plotly.graph_objects as go

from plotly.subplots import make_subplots

import plotly.express as px

# 加载数据(这里假设已经下载了Criteo数据集)

df = pd.read_csv("data/criteo-data.csv")

control_vars = ['f0', 'f1', 'f2', 'f3', 'f4', 'f5', 'f6', 'f7', 'f8', 'f9', 'f10', 'f11']

instrument_var = 'treatment'

endogenous_var = 'exposure'

outcome_var = 'conversion'

# 手动实现两阶段最小二乘法 (2SLS) - 使用Plotly可视化

print("=" * 60)

print("工具变量法 - 两阶段最小二乘法 (2SLS) 手动实现 (Plotly版本)")

print("=" * 60)

# 准备数据

X = df[control_vars]

Z = df[instrument_var] # 工具变量

D = df[endogenous_var] # 内生变量

Y = df[outcome_var] # 结果变量

# 添加常数项

X_with_const = sm.add_constant(X)

# ==================== 第一阶段回归 ====================

print("\n" + "="*40)

print("第一阶段回归: 工具变量(Z) → 内生变量(D)")

print("="*40)

# 构建第一阶段回归的数据:用工具变量和控制变量预测内生变量

first_stage_data = pd.concat([Z, X], axis=1)

first_stage_data_with_const = sm.add_constant(first_stage_data)

# 第一阶段回归

first_stage_model = sm.OLS(D, first_stage_data_with_const)

first_stage_results = first_stage_model.fit()

print("第一阶段回归结果:")

print(first_stage_results.summary())

# 获取第一阶段的预测值 D_hat

D_hat = first_stage_results.fittedvalues

# 计算第一阶段的关键统计量

first_stage_f_statistic = (first_stage_results.rsquared / (1 - first_stage_results.rsquared)) * \

(first_stage_results.df_model / first_stage_results.df_resid)

print(f"\n第一阶段关键统计量:")

print(f"- R-squared: {first_stage_results.rsquared:.6f}")

print(f"- F统计量: {first_stage_f_statistic:.2f}")

print(f"- 工具变量系数: {first_stage_results.params[instrument_var]:.6f}")

print(f"- 工具变量系数p值: {first_stage_results.pvalues[instrument_var]:.6f}")

# 弱工具变量检验

if first_stage_f_statistic > 10:

print("✓ 工具变量强度: 充足 (F > 10)")

else:

print("⚠ 警告: 工具变量可能较弱 (F < 10)")

============================================================

工具变量法 - 两阶段最小二乘法 (2SLS) 手动实现 (Plotly版本)

============================================================

========================================

第一阶段回归: 工具变量(Z) → 内生变量(D)

========================================

第一阶段回归结果:

OLS Regression Results

==============================================================================

Dep. Variable: exposure R-squared: 0.174

Model: OLS Adj. R-squared: 0.174

Method: Least Squares F-statistic: 2266.

Date: Thu, 16 Oct 2025 Prob (F-statistic): 0.00

Time: 18:55:08 Log-Likelihood: 59962.

No. Observations: 139796 AIC: -1.199e+05

Df Residuals: 139782 BIC: -1.198e+05

Df Model: 13

Covariance Type: nonrobust

==============================================================================

coef std err t P>|t| [0.025 0.975]

------------------------------------------------------------------------------

const 0.5374 0.105 5.117 0.000 0.332 0.743

treatment 0.0328 0.001 27.868 0.000 0.031 0.035

f0 -0.0020 0.000 -17.033 0.000 -0.002 -0.002

f1 0.0200 0.006 3.374 0.001 0.008 0.032

f2 -0.0103 0.002 -4.830 0.000 -0.014 -0.006

f3 -0.0301 0.000 -63.745 0.000 -0.031 -0.029

f4 0.0197 0.003 7.790 0.000 0.015 0.025

f5 -0.0353 0.002 -16.298 0.000 -0.040 -0.031

f6 -0.0044 0.000 -31.826 0.000 -0.005 -0.004

f7 -0.0027 0.001 -4.314 0.000 -0.004 -0.001

f8 -0.1313 0.016 -8.377 0.000 -0.162 -0.101

f9 0.0010 0.000 8.275 0.000 0.001 0.001

f10 -0.0159 0.004 -4.095 0.000 -0.024 -0.008

f11 -0.2165 0.030 -7.289 0.000 -0.275 -0.158

==============================================================================

Omnibus: 119936.057 Durbin-Watson: 2.007

Prob(Omnibus): 0.000 Jarque-Bera (JB): 3182819.270

Skew: 4.183 Prob(JB): 0.00

Kurtosis: 24.828 Cond. No. 8.19e+03

==============================================================================

Notes:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 8.19e+03. This might indicate that there are

strong multicollinearity or other numerical problems.

第一阶段关键统计量:

- R-squared: 0.174044

- F统计量: 0.00

- 工具变量系数: 0.032849

- 工具变量系数p值: 0.000000

⚠ 警告: 工具变量可能较弱 (F < 10)

# ==================== 第二阶段回归 ====================

print("\n" + "="*40)

print("第二阶段回归: 内生变量预测值(D_hat) → 结果变量(Y)")

print("="*40)

# 构建第二阶段回归的数据:用D_hat和控制变量预测结果变量

second_stage_data = pd.concat([pd.Series(D_hat, name='D_hat', index=X.index), X], axis=1)

second_stage_data_with_const = sm.add_constant(second_stage_data)

# 第二阶段回归

second_stage_model = sm.OLS(Y, second_stage_data_with_const)

second_stage_results = second_stage_model.fit(cov_type='HC1') # 使用异方差稳健标准误

print("第二阶段回归结果:")

print(second_stage_results.summary())

# 获取LATE估计值

late_estimate = second_stage_results.params['D_hat']

late_se = second_stage_results.bse['D_hat']

# 计算置信区间

ci_lower = late_estimate - 1.96 * late_se

ci_upper = late_estimate + 1.96 * late_se

print(f"\n局部平均处理效应 (LATE) 估计:")

print(f"- LATE估计值: {late_estimate:.6f}")

print(f"- 标准误: {late_se:.6f}")

print(f"- 95% 置信区间: [{ci_lower:.6f}, {ci_upper:.6f}]")

print(f"- t统计量: {late_estimate/late_se:.2f}")

print(f"- p值: {second_stage_results.pvalues['D_hat']:.6f}")

========================================

第二阶段回归: 内生变量预测值(D_hat) → 结果变量(Y)

========================================

第二阶段回归结果:

OLS Regression Results

==============================================================================

Dep. Variable: conversion R-squared: 0.116

Model: OLS Adj. R-squared: 0.115

Method: Least Squares F-statistic: 36.04

Date: Thu, 16 Oct 2025 Prob (F-statistic): 1.02e-91

Time: 18:56:23 Log-Likelihood: 2.1361e+05

No. Observations: 139796 AIC: -4.272e+05

Df Residuals: 139782 BIC: -4.271e+05

Df Model: 13

Covariance Type: HC1

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

const -0.2123 0.083 -2.545 0.011 -0.376 -0.049

D_hat 0.0409 0.009 4.372 0.000 0.023 0.059

f0 6.837e-07 4.44e-05 0.015 0.988 -8.63e-05 8.77e-05

f1 -0.0087 0.006 -1.351 0.177 -0.021 0.004

f2 -0.0013 0.001 -1.950 0.051 -0.003 6.99e-06

f3 0.0001 0.000 0.247 0.805 -0.001 0.001

f4 0.0456 0.005 8.723 0.000 0.035 0.056

f5 -0.0040 0.003 -1.564 0.118 -0.009 0.001

f6 -5.556e-05 6.61e-05 -0.841 0.400 -0.000 7.39e-05

f7 -0.0008 0.001 -1.426 0.154 -0.002 0.000

f8 0.0035 0.006 0.536 0.592 -0.009 0.016

f9 0.0002 6.78e-05 2.937 0.003 6.63e-05 0.000

f10 -0.0370 0.006 -6.426 0.000 -0.048 -0.026

f11 -0.2462 0.063 -3.912 0.000 -0.370 -0.123

==============================================================================

Omnibus: 268075.996 Durbin-Watson: 2.003

Prob(Omnibus): 0.000 Jarque-Bera (JB): 441942011.489

Skew: 15.249 Prob(JB): 0.00

Kurtosis: 276.755 Cond. No. 8.37e+03

==============================================================================

Notes:

[1] Standard Errors are heteroscedasticity robust (HC1)

[2] The condition number is large, 8.37e+03. This might indicate that there are

strong multicollinearity or other numerical problems.

局部平均处理效应 (LATE) 估计:

- LATE估计值: 0.040894

- 标准误: 0.009354

- 95% 置信区间: [0.022560, 0.059228]

- t统计量: 4.37

- p值: 0.000012

# ==================== Plotly可视化分析 ====================

print("\n" + "="*40)

print("Plotly交互式可视化分析")

print("="*40)

# 创建子图

fig = make_subplots(

rows=2, cols=2,

subplot_titles=[

f'第一阶段: {instrument_var} vs {endogenous_var}',

f'第二阶段: D_hat vs {outcome_var}',

'第一阶段残差图',

'第二阶段残差图'

],

vertical_spacing=0.1,

horizontal_spacing=0.1

)

# 对大样本数据进行采样以提高性能

sample_size = min(5000, len(Z))

if len(Z) > sample_size:

sample_indices = np.random.choice(len(Z), sample_size, replace=False)

Z_sample = Z.iloc[sample_indices]

D_sample = D.iloc[sample_indices]

D_hat_sample = D_hat.iloc[sample_indices]

Y_sample = Y.iloc[sample_indices]

first_stage_resid_sample = first_stage_results.resid.iloc[sample_indices]

second_stage_resid_sample = second_stage_results.resid.iloc[sample_indices]

first_stage_fitted_sample = first_stage_results.fittedvalues.iloc[sample_indices]

second_stage_fitted_sample = second_stage_results.fittedvalues.iloc[sample_indices]

else:

Z_sample = Z

D_sample = D

D_hat_sample = D_hat

Y_sample = Y

first_stage_resid_sample = first_stage_results.resid

second_stage_resid_sample = second_stage_results.resid

first_stage_fitted_sample = first_stage_results.fittedvalues

second_stage_fitted_sample = second_stage_results.fittedvalues

# 1. 第一阶段散点图

fig.add_trace(

go.Scatter(

x=Z_sample, y=D_sample,

mode='markers',

marker=dict(size=3, opacity=0.5, color='blue'),

name='观测点',

hovertemplate=f'{instrument_var}: %{{x}}<br>{endogenous_var}: %{{y}}<extra></extra>'

),

row=1, col=1

)

# 添加回归线

z_range = np.linspace(Z.min(), Z.max(), 100)

coef = first_stage_results.params[instrument_var]

const = first_stage_results.params['const']

regression_line = const + coef * z_range

fig.add_trace(

go.Scatter(

x=z_range, y=regression_line,

mode='lines',

line=dict(color='red', width=3),

name='回归线',

hovertemplate=f'{instrument_var}: %{{x}}<br>预测{endogenous_var}: %{{y:.3f}}<extra></extra>'

),

row=1, col=1

)

# 2. 第二阶段散点图

fig.add_trace(

go.Scatter(

x=D_hat_sample, y=Y_sample,

mode='markers',

marker=dict(size=3, opacity=0.5, color='green'),

name='观测点',

hovertemplate='D_hat: %{x:.3f}<br>%{y}: %{y}<extra></extra>',

showlegend=False

),

row=1, col=2

)

# 添加回归线

d_hat_range = np.linspace(D_hat.min(), D_hat.max(), 100)

coef_2nd = second_stage_results.params['D_hat']

const_2nd = second_stage_results.params['const']

regression_line_2nd = const_2nd + coef_2nd * d_hat_range

fig.add_trace(

go.Scatter(

x=d_hat_range, y=regression_line_2nd,

mode='lines',

line=dict(color='orange', width=3),

name='回归线',

hovertemplate='D_hat: %{x:.3f}<br>预测%{y}: %{y:.3f}<extra></extra>',

showlegend=False

),

row=1, col=2

)

# 3. 第一阶段残差图

fig.add_trace(

go.Scatter(

x=first_stage_fitted_sample, y=first_stage_resid_sample,

mode='markers',

marker=dict(size=3, opacity=0.5, color='purple'),

name='残差',

hovertemplate='预测值: %{x:.3f}<br>残差: %{y:.3f}<extra></extra>',

showlegend=False

),

row=2, col=1

)

# 添加零参考线

fig.add_hline(y=0, line_dash="dash", line_color="red", row=2, col=1)

# 4. 第二阶段残差图

fig.add_trace(

go.Scatter(

x=second_stage_fitted_sample, y=second_stage_resid_sample,

mode='markers',

marker=dict(size=3, opacity=0.5, color='brown'),

name='残差',

hovertemplate='预测值: %{x:.3f}<br>残差: %{y:.3f}<extra></extra>',

showlegend=False

),

row=2, col=2

)

# 添加零参考线

fig.add_hline(y=0, line_dash="dash", line_color="red", row=2, col=2)

# 更新布局

fig.update_layout(

title_text="工具变量法两阶段回归分析可视化",

title_x=0.5,

height=800,

width=1200,

showlegend=True,

template="plotly_white"

)

# 更新坐标轴标签

fig.update_xaxes(title_text=f"工具变量: {instrument_var}", row=1, col=1)

fig.update_yaxes(title_text=f"内生变量: {endogenous_var}", row=1, col=1)

fig.update_xaxes(title_text="内生变量预测值: D_hat", row=1, col=2)

fig.update_yaxes(title_text=f"结果变量: {outcome_var}", row=1, col=2)

fig.update_xaxes(title_text="预测值", row=2, col=1)

fig.update_yaxes(title_text="残差", row=2, col=1)

fig.update_xaxes(title_text="预测值", row=2, col=2)

fig.update_yaxes(title_text="残差", row=2, col=2)

fig.show()

# ==================== 创建结果汇总仪表板 ====================

# 创建结果汇总表格

results_summary = pd.DataFrame({

'指标': [

'第一阶段R²', '第一阶段F统计量', '工具变量系数',

'LATE估计值', 'LATE标准误', '95%置信区间下限', '95%置信区间上限'

],

'数值': [

first_stage_results.rsquared,

first_stage_f_statistic,

first_stage_results.params[instrument_var],

late_estimate,

late_se,

ci_lower,

ci_upper

],

'解读': [

'工具变量解释内生变量的程度',

'工具变量强度检验(F>10为强工具变量)',

'工具变量对内生变量的影响大小',

'广告曝光对转化的因果效应',

'效应估计的不确定性',

'效应估计的下界',

'效应估计的上界'

]

})

# 创建结果表格图

# 创建结果表格图 - 完整样式控制版本

results_table = go.Figure(data=[go.Table(

header=dict(

values=['<b>指标</b>', '<b>数值</b>', '<b>解读</b>'], # 使用HTML标签加粗

fill_color='#2E86AB', # 更专业的蓝色

align=['left', 'center', 'left'], # 每列不同的对齐方式

font=dict(

color='white', # 白色字体

size=14, # 字体大小

family="Arial" # 字体家族

),

height=40, # 表头高度

line=dict(color='#1A5276', width=2) # 边框颜色和宽度

),

cells=dict(

values=[results_summary['指标'],

results_summary['数值'].round(6),

results_summary['解读']],

fill_color=[

'#F8F9FA', # 第一列背景色 - 浅灰色

'#E8F4F8', # 第二列背景色 - 更浅的蓝色

'#F0F7FA' # 第三列背景色 - 非常浅的蓝色

],

align=['left', 'center', 'left'], # 单元格对齐方式

font=dict(

color=['#2C3E50', '#2980B9', '#34495E'], # 每列不同的字体颜色

size=12,

family="Arial"

),

height=35, # 单元格高度

line=dict(color='#BDC3C7', width=1) # 单元格边框

)

)])

# 更新表格布局样式

results_table.update_layout(

title=dict(

text='<b>工具变量分析结果汇总</b>', # 使用HTML标签

x=0.5, # 标题居中

font=dict(size=18, family="Arial", color="#2C3E50")

),

width=1000, # 表格宽度

height=400, # 表格高度

margin=dict(l=20, r=20, t=60, b=20), # 边距控制

paper_bgcolor='white', # 背景颜色

plot_bgcolor='white'

)

# 添加表格边框和阴影效果

results_table.update_traces(

columnwidth=[0.25, 0.25, 0.5], # 控制每列宽度比例

cells=dict(

prefix=[None, None, None], # 前缀,如货币符号

suffix=[None, None, None], # 后缀,如单位

format=[None, None, None] # 数字格式

)

)

results_table.show()

# ==================== 创建效应可视化图 ====================

effect_fig = go.Figure()

# 添加点估计

effect_fig.add_trace(go.Scatter(

x=[late_estimate], y=['LATE估计'],

mode='markers',

marker=dict(size=15, color='blue'),

name='点估计',

error_x=dict(

type='data',

array=[late_se * 1.96],

thickness=5,

width=10

)

))

# 添加置信区间

effect_fig.add_trace(go.Scatter(

x=[ci_lower, ci_upper], y=['置信区间', '置信区间'],

mode='lines',

line=dict(width=10, color='gray'),

name='95%置信区间'

))

effect_fig.update_layout(

title='局部平均处理效应(LATE)估计',

xaxis_title='效应大小',

yaxis_title='',

showlegend=True,

width=800,

height=400,

template="plotly_white"

)

# 添加零线参考

effect_fig.add_vline(x=0, line_dash="dash", line_color="red")

effect_fig.show()

CausalML使用实例

特征工程

import pandas as pd

import numpy as np

from sklearn.preprocessing import StandardScaler, KBinsDiscretizer

from sklearn.model_selection import train_test_split

import category_encoders as ce

data = pd.read_csv('data/criteo-data.csv')

# 设置参数

SAMPLE_FRAC = 0.3 # 采样比例,全量数据设为1.0

N_BINS = 10 # 分箱数量

TEST_SIZE = 0.2 # 测试集比例

RANDOM_STATE = 42 # 随机种子

# 步骤: 定义特征列

numerical_cols = [f'f{i}' for i in range(12)]

treatment_col = 'treatment'

target_cols = ['conversion', 'visit', 'exposure']

# 步骤1 处理缺失值

# 数值特征:用中位数填充,如果中位数是NaN则用0填充

for col in numerical_cols:

median_val = data[col].median()

# 如果中位数是NaN(即整个列都是缺失值),则用0填充

if pd.isna(median_val):

data[col] = data[col].fillna(0)

else:

data[col] = data[col].fillna(median_val)

# 步骤2: 数值特征处理

# 对数变换(减少偏度) - 确保值非负

for col in numerical_cols:

# 确保最小值大于等于0(对数变换要求)

min_val = data[col].min()

if min_val < 0:

# 如果最小值小于0,将所有值平移

data[col] = data[col] - min_val + 1e-6

data[col] = np.log1p(data[col])

# 标准化

scaler = StandardScaler()

data[numerical_cols] = scaler.fit_transform(data[numerical_cols])

# 分箱处理(创建新特征)

binner = KBinsDiscretizer(n_bins=N_BINS, encode='ordinal', strategy='quantile')

for col in numerical_cols:

bin_col_name = f'{col}_bin'

# 确保列中没有NaN值

if data[col].isna().any():

# 如果还有NaN,用0填充

data[col] = data[col].fillna(0)

# 确保列中没有无穷大值

if np.isinf(data[col]).any():

# 用最大值替换正无穷,最小值替换负无穷

max_val = data[col][~np.isinf(data[col])].max()

min_val = data[col][~np.isinf(data[col])].min()

data[col] = data[col].replace(np.inf, max_val)

data[col] = data[col].replace(-np.inf, min_val)

# 执行分箱

data[bin_col_name] = binner.fit_transform(data[[col]]).astype(int)

# 步骤3: 创建增量建模专用特征

# 实验组/对照组特征差异

for col in numerical_cols:

data[f'{col}_treat_diff'] = data[col] * data[treatment_col]

增量建模特征

data[f'{col}_treat_diff’] = data[col] * data[treatment_col]的作用

这个步骤是增量建模(Uplift Modeling)中非常关键的特征工程操作,它创建了原始特征与处理变量(treatment)的交互特征。以下是其详细作用和意义:捕捉异质性处理效应(Heterogeneous Treatment Effects)

- 核心目的:识别不同特征值的用户对处理(treatment)的不同响应

- 工作原理:通过将用户特征与处理变量相乘,创建能够反映”当用户具有特定特征值时,处理对其影响程度”的特征

- 数学表达:如果原始特征为X,处理变量为T(0或1),则新特征为X*T

为什么这在增量建模中至关重要?

在增量建模中,我们关心的不是简单的”用户是否会转化”,而是”处理(如广告)是否会导致用户转化”。这种特征交互能够:

- 区分自然转化和因果转化

- 自然转化:无论是否看到广告都会转化的用户

- 因果转化:因为看到广告才转化的用户

- 识别敏感用户群体:

- 帮助模型识别哪些特征的用户对处理最敏感(persuadable)

- 避免对不敏感用户(lost-cause或sure-thing)浪费资源

实际应用示例

假设我们有一个特征”用户活跃度”(activity_level):

- 用户A:activity_level = 0.8(高活跃用户)

- 用户B:activity_level = 0.2(低活跃用户)

创建交互特征后:

- 用户A(实验组):activity_level_treat_diff = 0.8 * 1 = 0.8

- 用户A(对照组):activity_level_treat_diff = 0.8 * 0 = 0

- 用户B(实验组):activity_level_treat_diff = 0.2 * 1 = 0.2

- 用户B(对照组):activity_level_treat_diff = 0.2 * 0 = 0

模型可以学习到:

- 高活跃用户(activity_level_treat_diff值高)对广告响应更好

- 低活跃用户(activity_level_treat_diff值低)对广告响应较差

在增量模型中的作用机制

当使用如S-Learner等增量模型时:

# S-Learner模型结构 conversion = model.predict([features, treatment_interaction_features])

- 模型可以学习特征与处理的交互效应

- 通过检查交互特征的系数/重要性,可以直接评估处理对不同特征用户的影响

与传统特征工程的区别

| 传统特征工程 | 增量建模特征工程 |

| 关注特征与目标的关系 | 关注特征与处理的交互效应 |

| 优化整体预测准确率 | 优化处理效应的估计 |

| 识别高转化用户 | 识别对处理敏感的用户 |

# 步骤4: 特征交叉

# 创建特征组合

data['f0_f1'] = data['f0'] * data['f1']

data['f2_div_f3'] = data['f2'] / (data['f3'] + 1e-6)

data['f4_f5'] = data['f4'] * data['f5']

data['f6_div_f7'] = data['f6'] / (data['f7'] + 1e-6)

# 步骤5: 创建统计特征

# 基于分箱的统计特征

for col in numerical_cols:

bin_col = f'{col}_bin'

# 分箱内均值

bin_mean = data.groupby(bin_col)[col].transform('mean')

data[f'{col}_bin_mean'] = bin_mean

# 分箱内标准差

bin_std = data.groupby(bin_col)[col].transform('std')

data[f'{col}_bin_std'] = bin_std.fillna(0)

# 步骤6: 创建交互特征

# 特征与treatment的交互

for col in numerical_cols[:6]: # 对前6个特征创建交互

data[f'{col}_treat_interaction'] = data[col] * data[treatment_col]

# 步骤7: 多项式特征

# 二次项

for col in numerical_cols[:5]:

data[f'{col}_squared'] = data[col] ** 2

特征处理策略

在特征工程中,选择合适的处理策略至关重要。

乘法交叉 (f0_f1, f4_f5)

作用:

- 捕捉特征间的协同效应

- 识别特征间的非线性关系

- 增强模型的表达能力

适用场景:

- 当两个特征在业务上存在关联关系时(如广告点击率 × 广告展示次数)

- 当特征可能具有乘积效应时(如用户活跃度 × 用户价值)

- 当特征分布相似且可能共同影响目标时

选择建议:

- 业务理解驱动:选择业务上存在潜在协同效应的特征对

- 相关性分析:选择相关性较高的特征对进行乘法交叉

- 特征重要性:基于初步模型的特征重要性选择重要特征进行交叉

- 领域知识:根据行业经验选择(如电商中价格 × 折扣率)

除法交叉 (f2_div_f3, f6_div_f7)

作用:

- 创建比例特征

- 捕捉特征间的相对关系

- 减少量纲影响

适用场景:

- 当特征间存在比例关系时(如点击率 = 点击/展示)

- 当需要标准化特征时(如人均消费 = 总消费/用户数)

- 当特征值范围差异大时

选择建议:

- 避免分母为零:添加小常数(如1e-6)防止除零错误

- 业务意义:选择有实际业务意义的比例组合

- 特征分布:选择分母特征分布较广的组合

- 相关性验证:计算交叉特征与目标的相关系数

分箱内均值 ({col}_bin_mean)

作用:

- 提供特征平滑表示

- 减少噪声影响

- 捕捉局部趋势

适用场景:

- 当特征分布不均匀时

- 当特征与目标关系非线性时

- 当需要减少过拟合风险时

选择建议:

- 高基数特征:优先处理取值多的特征

- 非线性关系:对与目标有非线性关系的特征使用

- 重要特征:对模型重要性高的特征添加

分箱内标准差 ({col}_bin_std)

作用:

- 衡量组内变异程度

- 捕捉特征值的稳定性

- 识别异常值模式

适用场景:

- 当特征在不同取值区间变异程度不同时

- 当需要识别异常模式时

- 在风险管理或异常检测场景

选择建议:

- 金融风控:在信用评分等场景特别有用

- 波动性特征:对波动大的特征优先使用

- 补充均值:与分箱内均值配合使用

特征与treatment交互 ({col}_treat_interaction)

- 作用:

- 捕捉处理效应的异质性

- 识别对处理敏感的用户群体

- 提升增量模型性能

- 选择建议:

- 关键特征优先:选择与业务目标最相关的特征

- 多样性覆盖:选择不同类型特征(如行为、人口统计)

- 模型反馈:基于初步模型选择重要特征

- 计算效率:限制交互特征数量(如前6个)

二次项 ({col}_squared)

作用:

- 捕捉非线性关系

- 识别U型或倒U型关系

- 增强模型表达能力

适用场景:

- 当特征与目标可能存在二次关系时

- 在存在阈值效应的情况下

- 当特征分布有偏时

选择建议:

- 偏态特征:对偏度高的特征优先使用

- 重要特征:选择模型重要性高的特征

- 业务理解:根据领域知识选择可能有非线性影响的特征

- 避免过度:限制多项式特征数量(如前5个)

因果效应特征

用户响应类型特征概述

在增量建模(Uplift Modeling)中,用户响应类型特征是最核心的因果效应特征之一。它基于用户是否接受处理(treatment)和是否转化(conversion)的组合,将用户分为四个关键群体:

Persuadable(可说服用户)

- 定义:接受处理(treatment=1)且转化(conversion=1)

- 特征含义:

- 广告/处理对这些用户产生了积极影响

- 如果没有处理,他们可能不会转化

- 业务价值:

- 营销活动的核心目标群体

- 增量效应的直接体现

- 处理策略:

- 优先投放广告

- 提供个性化优惠

- 加强用户互动

Lost-cause(无望用户)

- 定义:接受处理(treatment=1)但未转化(conversion=0)

- 特征含义:

- 广告/处理对这些用户无效

- 即使接受处理也不会转化

- 业务价值:

- 识别无效投放对象

- 避免资源浪费的关键

- 处理策略:

- 减少或停止广告投放

- 重新评估用户价值

- 考虑用户细分(如高价值但暂时不转化)

Sure-thing(必然转化用户)

- 定义:未接受处理(treatment=0)但转化(conversion=1)

- 特征含义:

- 这些用户无论如何都会转化

- 广告/处理对他们没有增量价值

- 业务价值:

- 识别忠诚用户群体

- 避免不必要的营销成本

- 处理策略:

- 减少广告投放频率

- 提供非促销性内容

- 专注于用户留存而非获取

Do-not-disturb(勿扰用户)

- 定义:未接受处理(treatment=0)且未转化(conversion=0)

- 特征含义:

- 这些用户可能对广告反感

- 处理可能产生负面效应

- 业务价值:

- 识别潜在反感用户

- 预防负面口碑传播

- 处理策略:

- 避免主动打扰

- 提供非侵入式互动

- 谨慎测试响应阈值

目标编码

目标编码(Target Encoding)是一种强大的特征工程技术,特别适用于处理分类变量。它通过使用目标变量的统计信息来编码分类特征,从而捕捉类别与目标变量之间的关系。在增量建模中,目标编码尤为重要,因为它可以帮助模型理解不同类别用户对广告处理的响应差异。

目标编码的核心思想是将分类变量的每个类别替换为该类别下目标变量的统计量(通常是均值)。例如:

- 对于用户所在城市特征:

- 城市A的用户平均转化率为15

- 城市B的用户平均转化率为08

- 编码后:

- 城市A →15

- 城市B →08

from sklearn.model_selection import KFold

# 步骤8: 创建因果效应特征 - 用户响应类型(在完整数据集上)

print("步骤8: 创建因果效应特征 - 用户响应类型...")

conditions = [

(data['treatment'] == 1) & (data['conversion'] == 1),

(data['treatment'] == 1) & (data['conversion'] == 0),

(data['treatment'] == 0) & (data['conversion'] == 1),

(data['treatment'] == 0) & (data['conversion'] == 0)

]

choices = ['persuadable', 'lost-cause', 'sure-thing', 'do-not-disturb']

data['response_type'] = np.select(conditions, choices, default='unknown')

# 步骤9: 数据集分割(包含所有特征)

print("\n步骤9: 数据集分割...")

# 为增量建模分割数据集,保持实验组/对照组比例

treatment_data = data[data['treatment'] == 1]

control_data = data[data['treatment'] == 0]

# 分割训练集和测试集

treatment_train, treatment_test = train_test_split(

treatment_data, test_size=TEST_SIZE, random_state=RANDOM_STATE

)

control_train, control_test = train_test_split(

control_data, test_size=TEST_SIZE, random_state=RANDOM_STATE

)

# 合并训练集和测试集(包含所有特征)

train = pd.concat([treatment_train, control_train])

test = pd.concat([treatment_test, control_test])

print(f"训练集大小: {train.shape}, 测试集大小: {test.shape}")

print(f"训练集包含特征: {train.columns.tolist()}")

# 步骤10: 目标编码处理

print("\n步骤10: 目标编码处理...")

# 定义目标编码特征 - 确保这些特征存在于分割后的数据集中

target_encode_features = [

'f0_bin', 'f1_bin', 'f2_bin', 'f3_bin', # 分箱特征

'response_type', # 用户响应类型

'f0_f1', 'f2_div_f3' # 交叉特征

]

# 验证特征是否存在

valid_encode_features = [f for f in target_encode_features if f in train.columns]

missing_features = set(target_encode_features) - set(valid_encode_features)

if missing_features:

print(f"警告: 以下特征在数据集中不存在: {missing_features}")

print("将使用可用特征进行目标编码")

# 定义目标变量

targets = ['conversion', 'visit', 'exposure']

# 创建存储编码器的字典

target_encoders = {}

# K折目标编码函数(修复版)

def kfold_target_encode(train_df, test_df, feature, target, n_folds=5):

"""

使用K折交叉验证进行目标编码

:param train_df: 训练集

:param test_df: 测试集

:param feature: 编码特征

:param target: 目标变量

:param n_folds: 折数

:return: 编码后的训练集和测试集,以及编码映射

"""

# 检查特征是否存在

if feature not in train_df.columns:

print(f" 错误: 特征 '{feature}' 在训练集中不存在")

return train_df, test_df, {}

# 创建编码列名

new_col = f'{feature}_{target}_encoded'

# 在训练集上计算全局均值(作为默认值)

global_mean = train_df[target].mean()

# 创建副本避免修改原始数据

train_encoded = train_df.copy()

test_encoded = test_df.copy()

# 初始化编码列

train_encoded[new_col] = np.nan

test_encoded[new_col] = global_mean # 测试集先用全局均值填充

# K折交叉编码

kf = KFold(n_splits=n_folds, shuffle=True, random_state=RANDOM_STATE)

# 确保特征列存在

if feature not in train_encoded.columns:

print(f" 错误: 特征 '{feature}' 在训练集中不存在")

return train_encoded, test_encoded, {}

for train_index, val_index in kf.split(train_encoded):

# 分割训练集和验证集

X_train = train_encoded.iloc[train_index]

X_val = train_encoded.iloc[val_index]

# 计算编码值(目标均值)

if feature in X_train.columns:

encode_map = X_train.groupby(feature)[target].mean().to_dict()

else:

print(f" 错误: 特征 '{feature}' 在训练子集中不存在")

encode_map = {}

# 应用编码到验证集

if feature in X_val.columns:

val_encoded = X_val[feature].map(encode_map).fillna(global_mean)

train_encoded.iloc[val_index, train_encoded.columns.get_loc(new_col)] = val_encoded.values

# 计算完整训练集的编码映射(用于测试集)

if feature in train_encoded.columns:

full_encode_map = train_encoded.groupby(feature)[target].mean().to_dict()

test_encoded[new_col] = test_encoded[feature].map(full_encode_map).fillna(global_mean)

else:

full_encode_map = {}

test_encoded[new_col] = global_mean

return train_encoded, test_encoded, full_encode_map

# 执行目标编码(仅使用有效特征)

for target in targets:

print(f"\n处理目标变量: {target}")

for feature in valid_encode_features:

print(f" - 编码特征: {feature}")

# 使用K折目标编码

train, test, encoder_map = kfold_target_encode(

train, test, feature, target, n_folds=5

)

if encoder_map: # 仅当编码成功时保存

target_encoders[f'{feature}_{target}_kfold'] = encoder_map

# 保存编码器

import joblib

joblib.dump(target_encoders, 'target_encoders.pkl')

print("\n目标编码器已保存到 target_encoders.pkl")

增量分数特征

在增量建模中,增量分数特征(Uplift Score Feature)是最核心、最具业务价值的特征之一。它直接量化了广告处理对用户转化的因果效应,是增量建模区别于传统预测模型的关键特征。

增量分数(Uplift Score)定义为:实验组转化率 – 对照组转化率

# 步骤11: 创建增量分数特征

# 计算每个特征的增量分数

for col in numerical_cols[:5]:

# 在训练集上计算增量分数

treat_mean = train[train['treatment'] == 1].groupby(f'{col}_bin')['conversion'].mean()

control_mean = train[train['treatment'] == 0].groupby(f'{col}_bin')['conversion'].mean()

uplift_score = (treat_mean - control_mean).to_dict()

# 应用到训练集和测试集

train[f'{col}_uplift_score'] = train[f'{col}_bin'].map(uplift_score)

test[f'{col}_uplift_score'] = test[f'{col}_bin'].map(uplift_score)

业务意义与价值

| 增量分数范围 | 业务解释 | 营销策略 |

| > 0.05 | 高敏感用户 | 优先投放,增加预算 |

| 0.01 – 0.05 | 中等敏感用户 | 适度投放,测试优化 |

| -0.01 – 0.01 | 低敏感用户 | 减少投放,避免打扰 |

| < -0.01 | 反感用户 | 停止投放,防止负面效应 |

# 步骤12: 特征选择(可选)

# 1. 识别并处理非数值型特征

non_numeric_cols = train.select_dtypes(exclude=['number']).columns

print(f"发现非数值型特征: {list(non_numeric_cols)}")

# 2. 将非数值型特征转换为数值型(如果适用)

# 例如,对'response_type'进行标签编码

if 'response_type' in non_numeric_cols:

response_mapping = {

'persuadable': 0,

'lost-cause': 1,

'sure-thing': 2,

'do-not-disturb': 3,

'unknown': 4

}

train['response_type_encoded'] = train['response_type'].map(response_mapping)

test['response_type_encoded'] = test['response_type'].map(response_mapping)

print("已将'response_type'特征编码为数值型")

# 3. 创建仅包含数值型特征的数据集

numeric_cols = train.select_dtypes(include=['number']).columns

train_numeric = train[numeric_cols]

test_numeric = test[numeric_cols]

# 4. 计算特征与目标的相关系数

corr_matrix = train_numeric.corr()

conversion_corr = corr_matrix['conversion'].abs().sort_values(ascending=False)

print("\n与转化率相关性最高的10个特征:")

print(conversion_corr.head(10))

# 5. 可视化相关性(可选)

import matplotlib.pyplot as plt

import seaborn as sns

plt.figure(figsize=(12, 8))

top_features = conversion_corr.head(20).index

sns.heatmap(train_numeric[top_features].corr(), annot=True, fmt=".2f", cmap='coolwarm')

plt.title('Top 20 Features Correlation Matrix')

plt.tight_layout()

plt.savefig('feature_correlation.png')

plt.show()

# 6. 基于相关性选择特征(可选)

# 选择与转化率相关性大于0.01的特征

selected_features = conversion_corr[conversion_corr > 0.01].index.tolist()

print(f"\n选择与转化率相关性>0.01的特征: {len(selected_features)}个")

# 更新训练集和测试集,仅包含选择的特征

selected_features = [f for f in selected_features if f != 'conversion'] # 移除目标变量

train_selected = train[selected_features + target_cols + ['treatment']]

test_selected = test[selected_features + target_cols + ['treatment']]

print(f"选择特征后的训练集大小: {train_selected.shape}")

模型训练与评估

Meta-learner algorithms

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from causalml.inference.meta import BaseSRegressor, BaseTRegressor, BaseXRegressor, BaseRRegressor, BaseDRRegressor

from sklearn.ensemble import RandomForestRegressor

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

from causalml.metrics import auuc_score

import shap

from sklearn.inspection import permutation_importance

# 设置随机种子确保结果可复现

np.random.seed(42)

data = pd.read_csv('data/criteo-data.csv')

# 准备特征和处理变量

feature_columns = ['f0', 'f1', 'f2', 'f3', 'f4', 'f5', 'f6', 'f7', 'f8', 'f9', 'f10', 'f11']

X = data[feature_columns]

W = data['treatment'] # 处理变量:是否分配到实验组

Y = data['conversion'] # 结果变量:是否转化

# 数据验证和修复

print("验证数据质量...")

# 检查处理变量是否只有0和1

if not set(W.unique()).issubset({0, 1}):

print(f"处理变量包含异常值: {set(W.unique())}")

# 修正处理变量

W = W.map({0: 0, 1: 1}).fillna(0).astype(int)

print("已修正处理变量")

# 检查结果变量是否只有0和1

if not set(Y.unique()).issubset({0, 1}):

print(f"结果变量包含异常值: {set(Y.unique())}")

# 修正结果变量

Y = Y.map({0: 0, 1: 1}).fillna(0).astype(int)

print("已修正结果变量")

# 检查特征值范围并标准化

for col in feature_columns:

min_val = X[col].min()

max_val = X[col].max()

if min_val < 0 or max_val > 1:

print(f"特征 {col} 值范围超出[0,1]: [{min_val}, {max_val}]")

# 标准化到[0,1]范围

X[col] = (X[col] - min_val) / (max_val - min_val)

print(f"已将特征 {col} 标准化到[0,1]范围")

# 随机划分训练集和测试集 (70%训练, 30%测试)

X_train, X_test, W_train, W_test, Y_train, Y_test = train_test_split(

X, W, Y, test_size=0.3, random_state=42, stratify=Y

)

print(f"训练集大小: {X_train.shape[0]}")

print(f"测试集大小: {X_test.shape[0]}")

print(f"训练集中转化率: {Y_train.mean():.4f}")

print(f"测试集中转化率: {Y_test.mean():.4f}")

# 使用CausalML实现多种增量模型

print("使用CausalML实现多种增量模型")

# 定义基础模型参数 - 使用随机森林

base_params = {

'n_estimators': 100,

'max_depth': 5,

'random_state': 42

}

# 1. S-Learner

s_learner = BaseSRegressor(learner=RandomForestRegressor(**base_params))

# 2. T-Learner

t_learner = BaseTRegressor(

learner=RandomForestRegressor(**base_params),

control_learner=RandomForestRegressor(**base_params)

)

# 3. X-Learner

x_learner = BaseXRegressor(

learner=RandomForestRegressor(**base_params)

)

# 4. R-Learner

r_learner = BaseRRegressor(

learner=RandomForestRegressor(**base_params)

)

# 5. Doubly Robust (DR) Learner

# 定义基础模型

base_learner = RandomForestRegressor(

n_estimators=100,

max_depth=5,

random_state=42

)

# 创建DR Learner

dr_learner = BaseDRRegressor(

learner=base_learner, # 使用learner参数

control_name=0 # 指定对照组名称

)

# 训练所有模型

models = {

'S-Learner': s_learner,

'T-Learner': t_learner,

'X-Learner': x_learner,

'R-Learner': r_learner,

'DR-Learner': dr_learner

}

for name, model in models.items():

print(f"训练 {name} 模型...")

model.fit(X=X_train, treatment=W_train, y=Y_train)

# 预测增量提升

uplift_predictions = {}

for name, model in models.items():

uplift = model.predict(X=X_test)

# 确保是一维数组

if uplift.ndim > 1:

uplift = uplift.ravel()

uplift_predictions[name] = uplift

# 计算平均增量提升 (ATE)

ate_results = {}

for name, uplift in uplift_predictions.items():

ate = uplift.mean()

ate_results[name] = ate

print(f"{name} 平均增量提升 (ATE): {ate:.6f}")

# 使用Plotly创建可视化 - 增量提升分布比较

# 创建2行3列的子图布局(因为现在有5个模型)

fig_uplift = make_subplots(rows=2, cols=3, subplot_titles=list(uplift_predictions.keys()))

for i, (name, uplift) in enumerate(uplift_predictions.items()):

row = i // 3 + 1

col = i % 3 + 1

fig_uplift.add_trace(

go.Histogram(

x=uplift,

name=name,

nbinsx=50,

opacity=0.7

),

row=row, col=col

)

fig_uplift.add_vline(

x=ate_results[name],

line_dash="dash",

line_color="red",

annotation_text=f"ATE: {ate_results[name]:.4f}",

annotation_position="top right",

row=row, col=col

)

fig_uplift.update_layout(

title='不同模型增量提升分布比较',

height=800,

showlegend=False

)

fig_uplift.show()

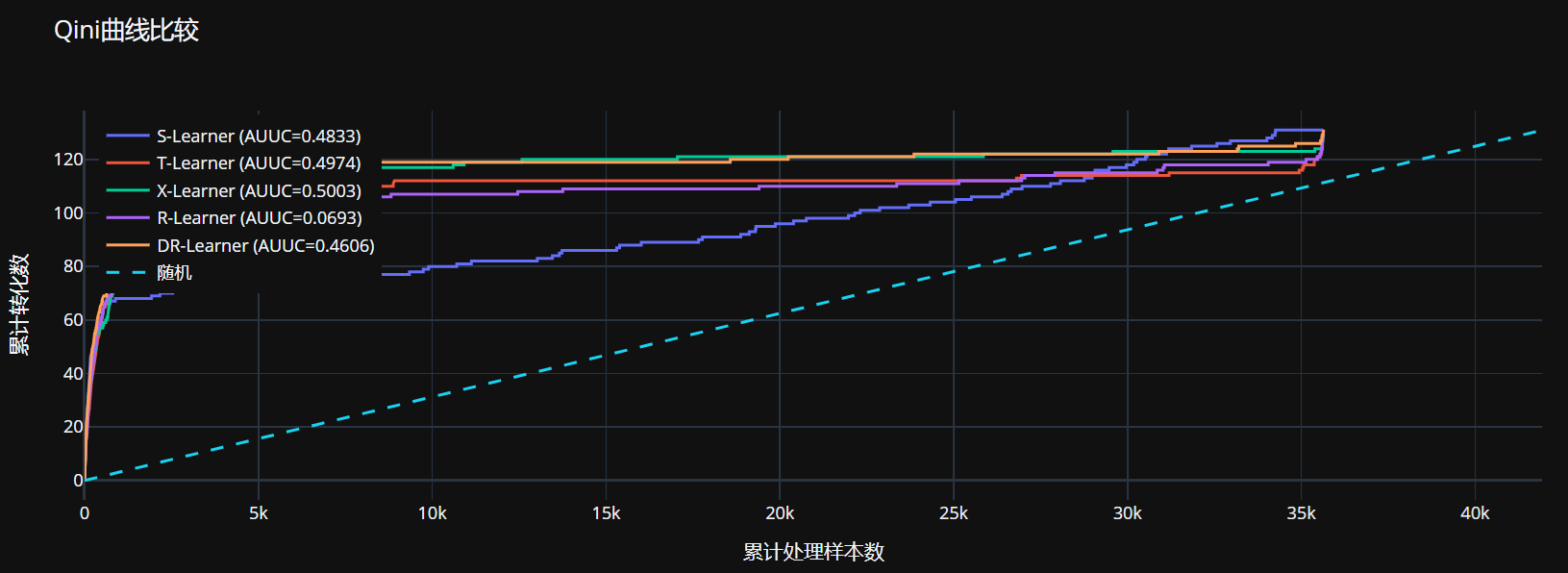

# 评估模型性能 - Qini曲线和AUUC

auuc_results = {}

qini_curves = {}

for name, uplift in uplift_predictions.items():

# 确保所有数组长度一致

min_length = min(len(W_test), len(Y_test), len(uplift))

# 创建包含必要列的DataFrame

auuc_df = pd.DataFrame({

'treatment': W_test.values[:min_length],

'conversion': Y_test.values[:min_length],

'uplift': uplift[:min_length]

})

# 添加模型名称列

auuc_df['model'] = name

# 计算AUUC

auuc_series = auuc_score(auuc_df, outcome_col='conversion', treatment_col='treatment', treatment_effect_col='uplift')

# 提取AUUC值 - 通常是第一个值

auuc_value = auuc_series.iloc[0] if isinstance(auuc_series, pd.Series) else auuc_series

auuc_results[name] = auuc_value

print(f"{name} AUUC: {auuc_value:.4f}")

# 计算Qini曲线数据

qini_df = pd.DataFrame({

'uplift': uplift[:min_length],

'treatment': W_test.values[:min_length],

'conversion': Y_test.values[:min_length]

})

qini_df = qini_df.sort_values('uplift', ascending=False)

qini_df['cumulative_treatment'] = qini_df['treatment'].cumsum()

qini_df['cumulative_conversion'] = qini_df['conversion'].cumsum()

qini_curves[name] = qini_df

# 使用Plotly创建Qini曲线比较

fig_qini = go.Figure()

for name, qini_df in qini_curves.items():

fig_qini.add_trace(go.Scatter(

x=qini_df['cumulative_treatment'],

y=qini_df['cumulative_conversion'],

mode='lines',

name=f"{name} (AUUC={auuc_results[name]:.4f})"

))

# 添加随机线

fig_qini.add_trace(go.Scatter(

x=[0, len(qini_df)],

y=[0, qini_df['conversion'].sum()],

mode='lines',

name='随机',

line=dict(dash='dash')

))

fig_qini.update_layout(

title='Qini曲线比较',

xaxis_title='累计处理样本数',

yaxis_title='累计转化数',

legend=dict(yanchor="top", y=0.99, xanchor="left", x=0.01)

)

fig_qini.show()

S-Learner AUUC: 0.4833 T-Learner AUUC: 0.4974 X-Learner AUUC: 0.5003 R-Learner AUUC: 0.0693 DR-Learner AUUC: 0.4606

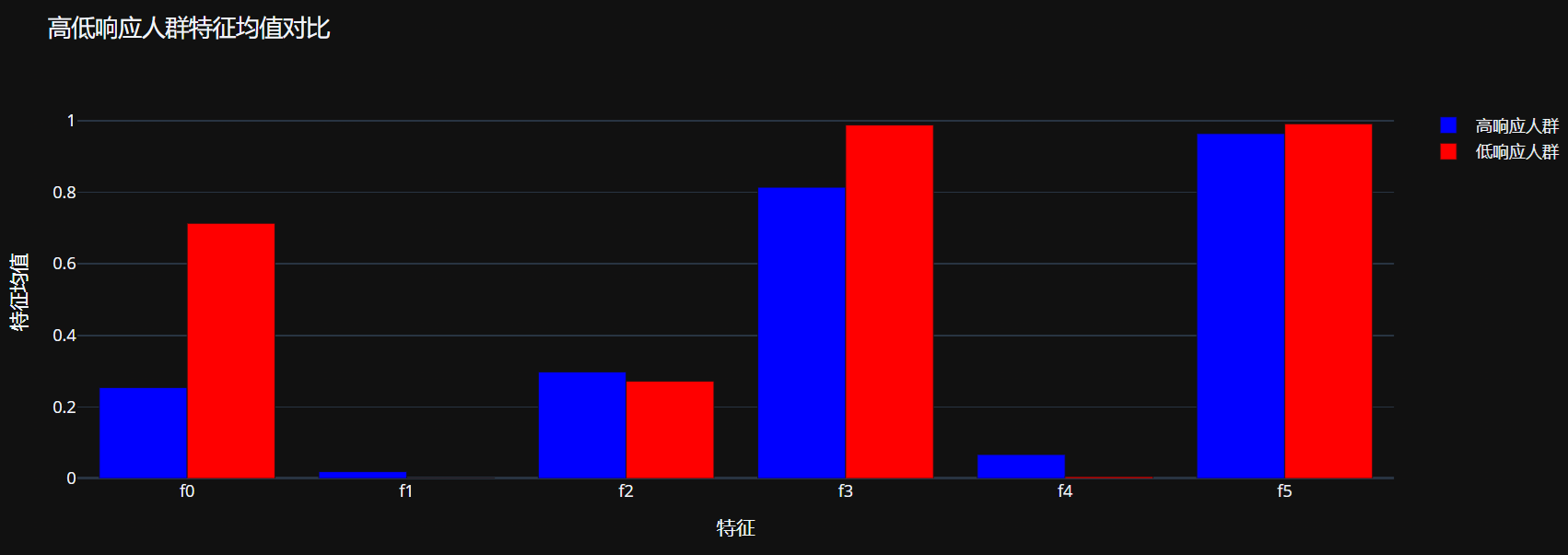

# 选择最佳模型(基于AUUC)

best_model_name = max(auuc_results, key=auuc_results.get)

best_uplift = uplift_predictions[best_model_name]

print(f"最佳模型: {best_model_name} (AUUC={auuc_results[best_model_name]:.4f})")

# 创建结果DataFrame用于后续分析

min_length = min(len(W_test), len(Y_test), len(best_uplift))

uplift_df = pd.DataFrame({

'uplift': best_uplift[:min_length],

'actual_treatment': W_test.values[:min_length],

'actual_conversion': Y_test.values[:min_length]

}, index=X_test.index[:min_length])

# 添加原始特征

for col in feature_columns:

uplift_df[col] = X_test[col].values[:min_length]

# 按增量提升排序

uplift_df_sorted = uplift_df.sort_values('uplift', ascending=False)

# 分析前10%高响应人群

top_10_percent = int(len(uplift_df_sorted) * 0.1)

high_response = uplift_df_sorted.head(top_10_percent)

low_response = uplift_df_sorted.tail(top_10_percent)

print(f"高响应人群 (前10%) 平均增量提升: {high_response['uplift'].mean():.6f}")

print(f"低响应人群 (后10%) 平均增量提升: {low_response['uplift'].mean():.6f}")

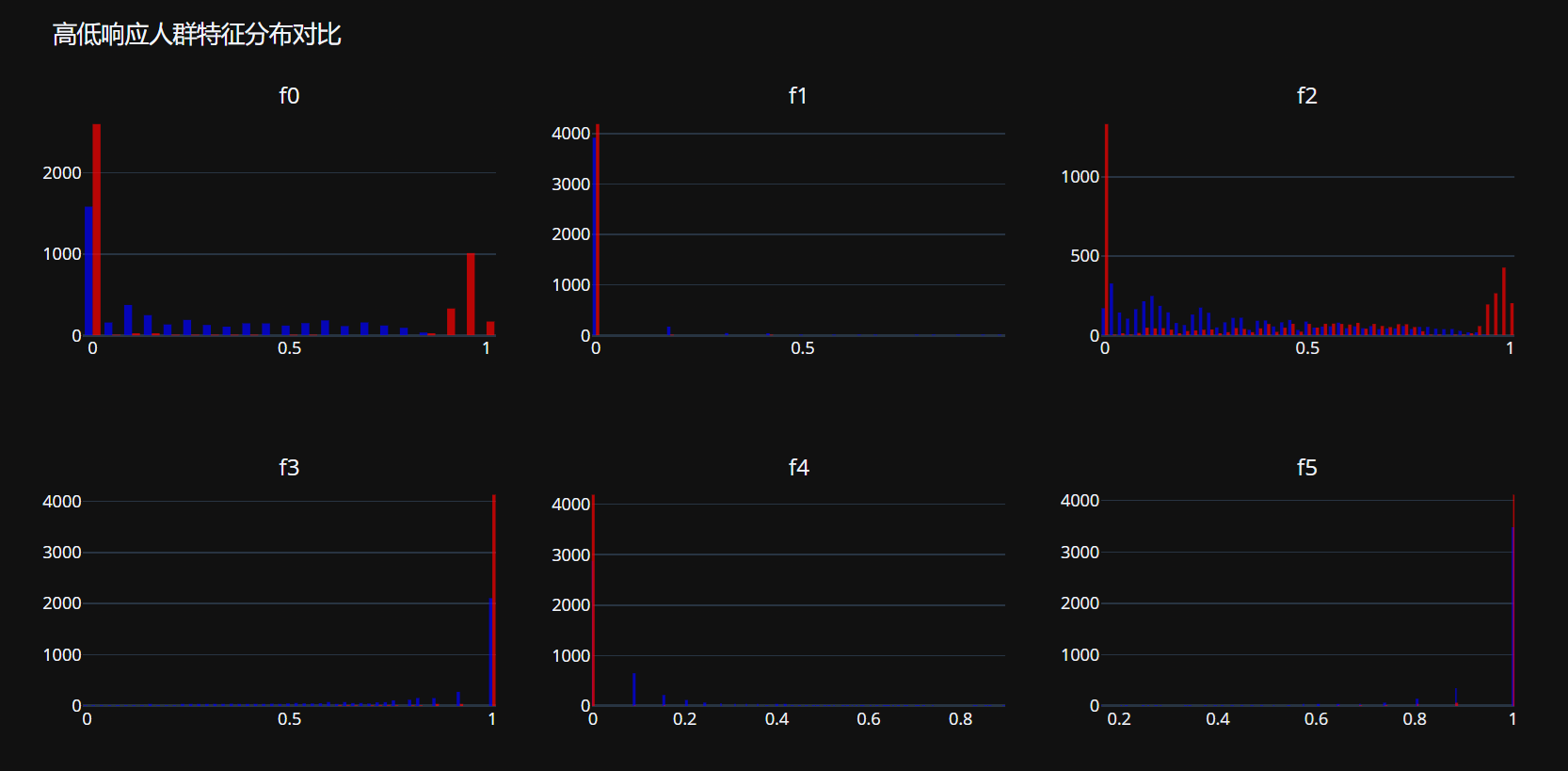

最佳模型: X-Learner (AUUC=0.5003) 高响应人群 (前10%) 平均增量提升: 0.013861 低响应人群 (后10%) 平均增量提升: -0.000464

# 使用Plotly创建高低响应人群特征对比可视化

feature_comparison_data = []

for feature in feature_columns[:6]: # 只展示前6个特征

feature_comparison_data.append({

'feature': feature,

'high_response_mean': high_response[feature].mean(),

'low_response_mean': low_response[feature].mean()

})

feature_comparison_df = pd.DataFrame(feature_comparison_data)

# 创建特征对比条形图

fig_features = go.Figure()

fig_features.add_trace(go.Bar(

x=feature_comparison_df['feature'],

y=feature_comparison_df['high_response_mean'],

name='高响应人群',

marker_color='blue'

))

fig_features.add_trace(go.Bar(

x=feature_comparison_df['feature'],

y=feature_comparison_df['low_response_mean'],

name='低响应人群',

marker_color='red'

))

fig_features.update_layout(

title='高低响应人群特征均值对比',

xaxis_title='特征',

yaxis_title='特征均值',

barmode='group'

)

fig_features.show()

# 评估增量提升模型的业务价值

# 模拟只对高响应人群投放广告的场景

targeted_group = high_response.copy()

targeted_group['targeted'] = 1 # 标记为投放目标

# 对照组:随机选择相同数量的人群

control_group = uplift_df_sorted.sample(n=top_10_percent, random_state=42)

control_group['targeted'] = 0 # 标记为非投放目标

# 合并两组数据

simulation_df = pd.concat([targeted_group, control_group])

# 计算业务指标

targeted_conversion_rate = simulation_df[simulation_df['targeted'] == 1]['actual_conversion'].mean()

control_conversion_rate = simulation_df[simulation_df['targeted'] == 0]['actual_conversion'].mean()

print(f"目标组转化率: {targeted_conversion_rate:.6f}")

print(f"对照组转化率: {control_conversion_rate:.6f}")

print(f"提升效果: {(targeted_conversion_rate - control_conversion_rate):.6f}")

print(f"相对提升: {(targeted_conversion_rate - control_conversion_rate)/control_conversion_rate*100:.2f}%")

# 使用Plotly创建业务模拟结果可视化

simulation_results = pd.DataFrame({

'group': ['目标组', '对照组'],

'conversion_rate': [targeted_conversion_rate, control_conversion_rate]

})

fig_simulation = px.bar(

simulation_results,

x='group',

y='conversion_rate',

title='目标组 vs 对照组转化率对比',

labels={'conversion_rate': '转化率', 'group': '组别'},

color='group',

text_auto='.4f'

)

fig_simulation.show()

目标组转化率: 0.025996 对照组转化率: 0.003577 提升效果: 0.022418 相对提升: 626.67%

# 特征重要性分析 - 使用最佳模型

if best_model_name == 'S-Learner':

# S-Learner的特征重要性

if hasattr(s_learner.learner, 'feature_importances_'):

feature_importances = s_learner.learner.feature_importances_

feature_importance = pd.DataFrame({

'feature': feature_columns + ['treatment'],

'importance': feature_importances

}).sort_values('importance', ascending=False)

else:

print("警告: S-Learner模型没有特征重要性属性")

feature_importance = pd.DataFrame({

'feature': feature_columns + ['treatment'],

'importance': np.zeros(len(feature_columns) + 1)

})

elif best_model_name == 'T-Learner':

# T-Learner的特征重要性 - 取平均

if hasattr(t_learner.models[1], 'feature_importances_') and hasattr(t_learner.models[0], 'feature_importances_'):

importance_treatment = t_learner.models[1].feature_importances_

importance_control = t_learner.models[0].feature_importances_

avg_importance = (importance_treatment + importance_control) / 2

feature_importance = pd.DataFrame({

'feature': feature_columns,

'importance': avg_importance

}).sort_values('importance', ascending=False)

else:

print("警告: T-Learner模型没有特征重要性属性")

feature_importance = pd.DataFrame({